import pandas as pd

import numpy as np

import statsmodels.formula.api as smf

import seaborn as sns

import matplotlib.pyplot as plt

import statsmodels.api as sm

from scipy import stats

from sklearn.model_selection import cross_val_predict

from patsy import dmatrices

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error7 Potential issues

Read section 3.3.3 (4, 5, & 6) of the book before using these notes.

Note that in this course, lecture notes are not sufficient, you must read the book for better understanding. Lecture notes are just implementing the concepts of the book on a dataset, but not explaining the concepts elaborately.

Let us continue with the car price prediction example from the previous chapter.

trainf = pd.read_csv('./Datasets/Car_features_train.csv')

trainp = pd.read_csv('./Datasets/Car_prices_train.csv')

testf = pd.read_csv('./Datasets/Car_features_test.csv')

testp = pd.read_csv('./Datasets/Car_prices_test.csv')

train = pd.merge(trainf,trainp)

train.head()| carID | brand | model | year | transmission | mileage | fuelType | tax | mpg | engineSize | price | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 18473 | bmw | 6 Series | 2020 | Semi-Auto | 11 | Diesel | 145 | 53.3282 | 3.0 | 37980 |

| 1 | 15064 | bmw | 6 Series | 2019 | Semi-Auto | 10813 | Diesel | 145 | 53.0430 | 3.0 | 33980 |

| 2 | 18268 | bmw | 6 Series | 2020 | Semi-Auto | 6 | Diesel | 145 | 53.4379 | 3.0 | 36850 |

| 3 | 18480 | bmw | 6 Series | 2017 | Semi-Auto | 18895 | Diesel | 145 | 51.5140 | 3.0 | 25998 |

| 4 | 18492 | bmw | 6 Series | 2015 | Automatic | 62953 | Diesel | 160 | 51.4903 | 3.0 | 18990 |

# Considering the model developed to address assumptions in the previous chapter

# Model with an interaction term and a variable transformation term

ols_object = smf.ols(formula = 'np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | np.log(price) | R-squared: | 0.803 |

| Model: | OLS | Adj. R-squared: | 0.803 |

| Method: | Least Squares | F-statistic: | 1834. |

| Date: | Sun, 10 Mar 2024 | Prob (F-statistic): | 0.00 |

| Time: | 16:51:01 | Log-Likelihood: | -1173.8 |

| No. Observations: | 4960 | AIC: | 2372. |

| Df Residuals: | 4948 | BIC: | 2450. |

| Df Model: | 11 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | -238.2125 | 25.790 | -9.237 | 0.000 | -288.773 | -187.652 |

| year | 0.1227 | 0.013 | 9.608 | 0.000 | 0.098 | 0.148 |

| engineSize | 13.8349 | 5.795 | 2.387 | 0.017 | 2.475 | 25.195 |

| mileage | 0.0005 | 0.000 | 3.837 | 0.000 | 0.000 | 0.001 |

| mpg | -1.2446 | 0.345 | -3.610 | 0.000 | -1.921 | -0.569 |

| year:engineSize | -0.0067 | 0.003 | -2.324 | 0.020 | -0.012 | -0.001 |

| year:mileage | -2.67e-07 | 6.8e-08 | -3.923 | 0.000 | -4e-07 | -1.34e-07 |

| year:mpg | 0.0006 | 0.000 | 3.591 | 0.000 | 0.000 | 0.001 |

| engineSize:mileage | -2.668e-07 | 4.08e-07 | -0.654 | 0.513 | -1.07e-06 | 5.33e-07 |

| engineSize:mpg | 0.0028 | 0.000 | 6.842 | 0.000 | 0.002 | 0.004 |

| mileage:mpg | 7.235e-08 | 1.79e-08 | 4.036 | 0.000 | 3.72e-08 | 1.08e-07 |

| I(mileage ** 2) | 1.828e-11 | 5.64e-12 | 3.240 | 0.001 | 7.22e-12 | 2.93e-11 |

| Omnibus: | 711.514 | Durbin-Watson: | 0.498 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 2545.807 |

| Skew: | 0.699 | Prob(JB): | 0.00 |

| Kurtosis: | 6.220 | Cond. No. | 1.73e+13 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.73e+13. This might indicate that there are

strong multicollinearity or other numerical problems.

#Computing RMSE on test data

pred_price_log = model_log.predict(testf)

np.sqrt(((testp.price - np.exp(pred_price_log))**2).mean())9094.2094732329667.1 Outliers

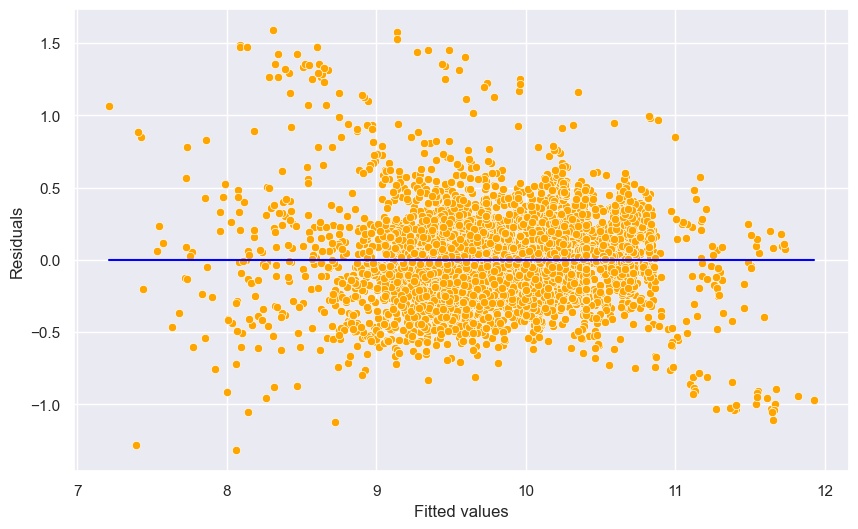

An outlier is a point for which the true response (\(y_i\)) is far from the value predicted by the model. Residual plots can be used to identify outliers.

If the the response at the \(i^{th}\) observation is \(y_i\), the prediction is \(\hat{y}_i\), then the residual \(e_i\) is:

\[e_i = y_i - \hat{y_i}\]

#Plotting residuals vs fitted values

sns.set(rc={'figure.figsize':(10,6)})

sns.scatterplot(x = (model_log.fittedvalues), y=(model_log.resid),color = 'orange')

sns.lineplot(x = [model_log.fittedvalues.min(),model_log.fittedvalues.max()],y = [0,0],color = 'blue')

plt.xlabel('Fitted values')

plt.ylabel('Residuals');

Some of the errors may be high. However, it is difficult to decide how large a residual needs to be before we can consider a point to be an outlier. To address this problem, we have standardized residuals, which are defined as:

\[r_i = \frac{e_i}{RSE(\sqrt{1-h_{ii}})},\] where \(r_i\) is the standardized residual, \(RSE\) is the residual standard error, and \(h_{ii}\) is the leverage (introduced in the next section) of the \(i^{th}\) observation.

Standardized residuals, allow the residuals to be compared on a standard scale.

Issue with standardized residuals:, If the observation corresponding to the standardized residual has a high leverage, then it will drag the regression line / plane / hyperplane towards it, thereby influencing the estimate of the residual itself.

Studentized residuals: To address the issue with standardized residuals, studentized residual for the \(i^{th}\) observation is computed as the standardized residual, but with the \(RSE\) (residual standard error) computed after removing the \(i^{th}\) observation from the data. Studentized residual, \(t_i\) for the \(i^{th}\) observation is given as:

\[t_i = \frac{e_i}{RSE_{i}(\sqrt{1-h_{ii}})},\] where \(RSE_{i}\) is the residual standard error of the model developed on the data without the \(i^{th}\) observation.

Distribution of studentized residuals: If the regression model is appropriate such that no case is outlying because of a change in the model, then each studentized residual will follow a \(t\) distribution with \((n–p–1)\) degrees of freedom.

As the studentized residuals follow a \(t\) distribution, we can conduct a hypothesis test to identify whether an observation is an outlier or not for a given sigificance level. Note that the test will be two-sided since we are not concerned with the sign of the residuals, but only their absolute values.

In the current example, for a signficance level of 5%, the critical \(t\)-statistic is \(t\big(1 - \frac{\alpha}{2}, n - p - 1\big)\), as calculated below.

n = train.shape[0]

p = model_log.df_model

alpha = 0.05

# Critical value

stats.t.ppf(1 - alpha/2, n - p - 1)1.9604435402730618If we were conducting the test for a single observation, we’ll compare the studentized residual for that observation with the critical \(t\)-statistic, and if the residual is greater than the critical value, we’ll consider that observation as an outlier.

However, typically, we’ll be interested in conducting this test for all observations, and thus we’ll need a more conservative critical value for the same signficance level. This critical value is given by the Bonferroni correction as \(t\big(1 - \frac{\alpha}{2n}, n - p - 1\big)\).

Thus, the minimum value of studentized residual for which the observation will be classified as an outlier is:

critical_value = stats.t.ppf(1-alpha/(2*n), n - p - 1)

critical_value4.4200129981725365The studentized residuals can be obtained using the outlier_test() method of the object returned by the fit() method of an OLS object. Let us find the studentized residuals in our car price prediction model.

#Studentized residuals

out = model_log.outlier_test()

out| student_resid | unadj_p | bonf(p) | |

|---|---|---|---|

| 0 | -1.164204 | 0.244398 | 1.0 |

| 1 | -0.801879 | 0.422661 | 1.0 |

| 2 | -1.263820 | 0.206354 | 1.0 |

| 3 | -0.614171 | 0.539131 | 1.0 |

| 4 | 0.027929 | 0.977720 | 1.0 |

| ... | ... | ... | ... |

| 4955 | -0.523361 | 0.600747 | 1.0 |

| 4956 | -0.509538 | 0.610398 | 1.0 |

| 4957 | -1.718808 | 0.085712 | 1.0 |

| 4958 | -0.077594 | 0.938154 | 1.0 |

| 4959 | -0.482388 | 0.629551 | 1.0 |

4960 rows × 3 columns

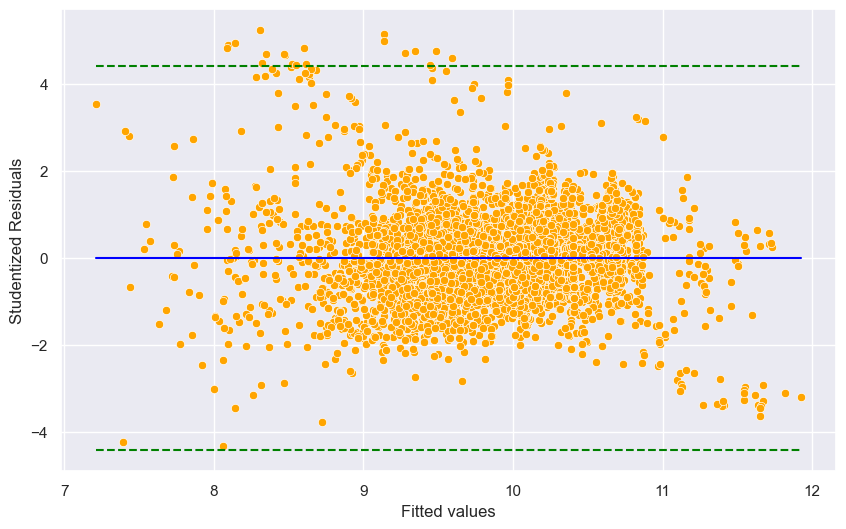

Studentized residuals are in the first column of the above table. Let us plot the studentized residuals against fitted values. In the figure below, the studentized residuals above the top dotted green line and below the bottom dotted green line are outliers.

#Plotting studentized residuals vs fitted values

sns.scatterplot(x = (model_log.fittedvalues), y=(out.student_resid),color = 'orange')

sns.lineplot(x = [model_log.fittedvalues.min(),model_log.fittedvalues.max()],y = [0,0],color = 'blue')

ax = sns.lineplot(x = [model_log.fittedvalues.min(),model_log.fittedvalues.max()],y = [critical_value, critical_value],

color = 'green')

sns.lineplot(x = [model_log.fittedvalues.min(),model_log.fittedvalues.max()],y = [-critical_value, -critical_value],

color = 'green')

ax.lines[1].set_linestyle("--")

ax.lines[2].set_linestyle("--")

plt.xlabel('Fitted values')

plt.ylabel('Studentized Residuals');

Outliers: Observations whose studentized residuals have a magnitude greater than \(t\big(1 - \frac{\alpha}{2n}, n - p - 1\big)\).

Impact of outliers: Outliers do not have a large impact on the OLS line / plane / hyperplane as long as they don’t have a high leverage (discussed in the next section). However, outliers do inflate the residual standard error (RSE). RSE in turn is used to compute the standard errors of regression coefficients. As a result, statistically significant variables may appear to be insignificant, and \(R^2\) may appear to be lower.

Are there outliers in our example?

#Number of points with absolute studentized residuals greater than critical_value

np.sum(np.abs(out.student_resid) > critical_value)19Let us analyze the outliers.

ind = (np.abs(out.student_resid) > critical_value)

pd.concat([train.loc[ind,:], np.exp(model_log.fittedvalues[ind])], axis = 1)| carID | brand | model | year | transmission | mileage | fuelType | tax | mpg | engineSize | price | 0 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2042 | 18228 | bmw | i3 | 2017 | Automatic | 24041 | Hybrid | 0 | 78.2726 | 0.0 | 21495 | 5537.337460 |

| 2046 | 17362 | bmw | i3 | 2016 | Automatic | 68000 | Hybrid | 0 | 78.0258 | 0.0 | 15990 | 4107.811771 |

| 2050 | 19224 | bmw | i3 | 2016 | Automatic | 20013 | Hybrid | 0 | 77.9310 | 0.0 | 19875 | 4784.986021 |

| 2051 | 13913 | bmw | i3 | 2014 | Automatic | 34539 | Hybrid | 0 | 78.3838 | 0.0 | 14495 | 3269.686113 |

| 2055 | 16512 | bmw | i3 | 2017 | Automatic | 28169 | Hybrid | 0 | 77.9799 | 0.0 | 23751 | 5454.207333 |

| 2059 | 15844 | bmw | i3 | 2016 | Automatic | 19995 | Hybrid | 0 | 78.2825 | 0.0 | 19850 | 4773.707307 |

| 2060 | 12107 | bmw | i3 | 2016 | Automatic | 8421 | Hybrid | 0 | 77.9125 | 0.0 | 19490 | 5028.048305 |

| 2061 | 18215 | bmw | i3 | 2014 | Automatic | 37161 | Hybrid | 0 | 77.7505 | 0.0 | 14182 | 3259.101789 |

| 2063 | 15617 | bmw | i3 | 2017 | Automatic | 41949 | Hybrid | 140 | 78.1907 | 0.0 | 19998 | 5173.402125 |

| 2064 | 18020 | bmw | i3 | 2015 | Automatic | 9886 | Hybrid | 0 | 78.1810 | 0.0 | 17481 | 4214.053932 |

| 2143 | 12972 | bmw | i8 | 2017 | Automatic | 9992 | Hybrid | 135 | 69.2767 | 1.5 | 59950 | 14675.519883 |

| 2144 | 13826 | bmw | i8 | 2015 | Automatic | 43323 | Hybrid | 0 | 69.2683 | 1.5 | 44990 | 9289.744847 |

| 2150 | 18949 | bmw | i8 | 2015 | Automatic | 43102 | Hybrid | 0 | 69.0922 | 1.5 | 42890 | 9300.576839 |

| 2151 | 18977 | bmw | i8 | 2016 | Automatic | 10087 | Hybrid | 0 | 68.9279 | 1.5 | 48998 | 12607.867130 |

| 2744 | 18866 | merc | M Class | 2004 | Automatic | 121000 | Diesel | 325 | 29.3713 | 2.7 | 19950 | 4068.883513 |

| 3548 | 13149 | audi | S4 | 2019 | Automatic | 4900 | Diesel | 145 | 40.7030 | 0.0 | 45000 | 10679.644966 |

| 4116 | 16420 | audi | SQ5 | 2020 | Automatic | 1500 | Diesel | 145 | 34.7968 | 0.0 | 56450 | 13166.374034 |

| 4117 | 17611 | audi | SQ5 | 2019 | Automatic | 1500 | Diesel | 145 | 34.5016 | 0.0 | 48800 | 11426.005642 |

| 4851 | 16577 | bmw | Z3 | 2002 | Automatic | 16500 | Petrol | 325 | 29.7614 | 2.2 | 14995 | 3426.196256 |

Do you notice some unique characteristics of these observations due to which they may be outliers?

What methods you can propose to estimate the price of these outliers more accurately, which will also result in the overall reduction in RMSE?

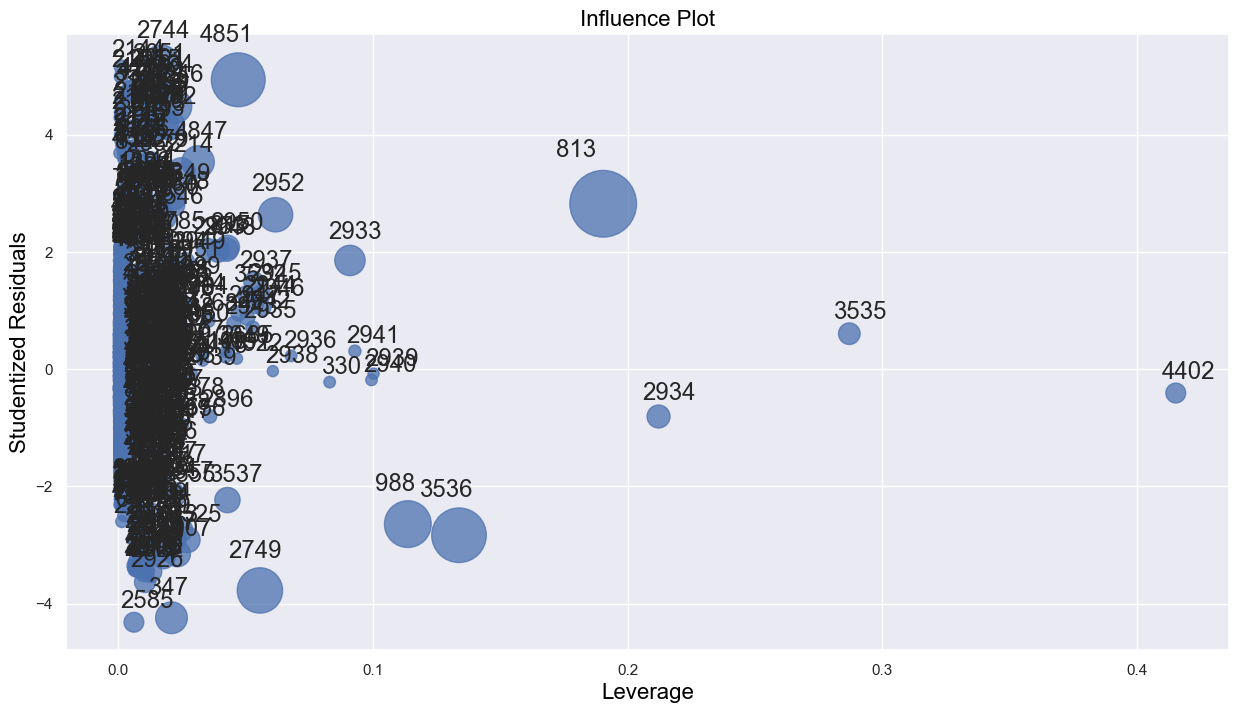

7.2 High leverage points

High leverage points are those with an unsual value of the predictor(s). They have the potential to have a relatively higher impact on the OLS line / plane / hyperplane, as compared to the outliers.

Leverage statistic (page 99 of the book): In order to quantify an observation’s leverage, we compute the leverage statistic. A large value of this statistic indicates an observation with high leverage. For simple linear regression, \[\begin{equation} h_i = \frac{1}{n} + \frac{(x_i - \bar x)^2}{\sum_{i'=1}^{n}(x_{i'} - \bar x)^2}. \end{equation}\]

It is clear from this equation that \(h_i\) increases with the distance of \(x_i\) from \(\bar x\). A large value of \(h_i\) indicates that the \(i^{th}\) observation is distance from the center of all the other observations in terms of predictor values.

The leverage statistic \(h_i\) is always between \(1/n\) and \(1\), and the average leverage for all the observations is always equal to \((p+1)/n\):

\[\begin{equation} \bar{h} = \frac{p+1}{n} \end{equation}\]

So if a given observation has a leverage statistic that greatly exceeds \((p+1)/n\), then we may suspect that the corresponding point has high leverage.

If the \(i^{th}\) observation has a large leverage \(h_i\), it may exercise substantial leverage in determining the fitted value \(\hat{Y}_i\), because:

The fitted value \(\hat{Y}_i\) is a linear combination of the observed \(Y\) values, and \(h_i\) is the weight of observation \(Y_i\) in determining this fitted value.

The larger the \(h_i\), the smaller is the variance of the residual \(e_i\), and the closer the fitted value \(\hat{Y}_i\) will tend to be the observed value \(Y_i\).

Thumb rules:

- A leverage \(h_i\) is usually considered large if it is more than twice as large as the mean value \(\bar{h}\).

- Another suggested guideline is that \(h_i\) values exceeding 0.5 indicate very high leverage, whereas those between 0.2 and 0.5 indicate moderate leverage.

Influential points: Note that if a high leverage point falls in line with the regression line, then it will not affect the regression line. However, it may inflate \(R\)-squared and increase the significance of predictors. If a high leverage point falls away from the regression line, then it is also an outlier, and will affect the regression line. The points whose presence significantly affects the regression line are called influential points. A point that is both a high leverage point and an outlier is likely to be an influential point. However, a high leverage point is not necessarily an influential point.

Source for influential points: https://online.stat.psu.edu/stat501/book/export/html/973

Let us see if there are any high leverage points in our regression model.

#Model with an interaction term and a variable transformation term

ols_object = smf.ols(formula = 'np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | np.log(price) | R-squared: | 0.803 |

| Model: | OLS | Adj. R-squared: | 0.803 |

| Method: | Least Squares | F-statistic: | 1834. |

| Date: | Sun, 10 Mar 2024 | Prob (F-statistic): | 0.00 |

| Time: | 16:53:39 | Log-Likelihood: | -1173.8 |

| No. Observations: | 4960 | AIC: | 2372. |

| Df Residuals: | 4948 | BIC: | 2450. |

| Df Model: | 11 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | -238.2125 | 25.790 | -9.237 | 0.000 | -288.773 | -187.652 |

| year | 0.1227 | 0.013 | 9.608 | 0.000 | 0.098 | 0.148 |

| engineSize | 13.8349 | 5.795 | 2.387 | 0.017 | 2.475 | 25.195 |

| mileage | 0.0005 | 0.000 | 3.837 | 0.000 | 0.000 | 0.001 |

| mpg | -1.2446 | 0.345 | -3.610 | 0.000 | -1.921 | -0.569 |

| year:engineSize | -0.0067 | 0.003 | -2.324 | 0.020 | -0.012 | -0.001 |

| year:mileage | -2.67e-07 | 6.8e-08 | -3.923 | 0.000 | -4e-07 | -1.34e-07 |

| year:mpg | 0.0006 | 0.000 | 3.591 | 0.000 | 0.000 | 0.001 |

| engineSize:mileage | -2.668e-07 | 4.08e-07 | -0.654 | 0.513 | -1.07e-06 | 5.33e-07 |

| engineSize:mpg | 0.0028 | 0.000 | 6.842 | 0.000 | 0.002 | 0.004 |

| mileage:mpg | 7.235e-08 | 1.79e-08 | 4.036 | 0.000 | 3.72e-08 | 1.08e-07 |

| I(mileage ** 2) | 1.828e-11 | 5.64e-12 | 3.240 | 0.001 | 7.22e-12 | 2.93e-11 |

| Omnibus: | 711.514 | Durbin-Watson: | 0.498 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 2545.807 |

| Skew: | 0.699 | Prob(JB): | 0.00 |

| Kurtosis: | 6.220 | Cond. No. | 1.73e+13 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.73e+13. This might indicate that there are

strong multicollinearity or other numerical problems.

#Computing the leverage statistic for each observation

influence = model_log.get_influence()

leverage = influence.hat_matrix_diag#Visualizng leverage against studentized residuals

sns.set(rc={'figure.figsize':(15,8)})

sm.graphics.influence_plot(model_log);

Let us identify the high leverage points in the data, as they may be affecting the regression line if they are outliers as well, i.e., if they are influential points. Note that there is no defined threshold for a point to be classified as a high leverage point. Some statisticians consider points having twice the average leverage as high leverage points, some consider points having thrice the average leverage as high leverage points, and so on.

out = model_log.outlier_test()#Average leverage of points

average_leverage = (model_log.df_model+1)/model_log.nobs

average_leverage0.0024193548387096775Let us consider points having four times the average leverage as high leverage points.

#We will remove all observations that have leverage higher than the threshold value.

high_leverage_threshold = 3*average_leverage#Number of high leverage points in the dataset

np.sum(leverage>high_leverage_threshold)2697.2.1 Identifying extrapolation using leverage

Leverage can be used to check if prediction on a particular point will lead to extrapolation.

Below is the function that can be used to find the leverage at for a particular observation xnew. Note that xnew has to be a single-dimensional array, and X has to be the predictor matrix (also called the design matrix).

def leverage_compute(xnew, X):

return(xnew.reshape(-1, 1).T.dot(np.linalg.inv(X.T.dot(X))).dot(xnew.reshape(-1, 1))[0][0])As expected, the function will return the same leverage as provided by the hat_matrix_diag attribute of the objected returned by the get_influence() method of model_log as shown below:

leverage[0]0.0026426981240353694As the observation for prediction is required we need to create the predictor matrix X to create all the observations with the interactions specified in the model.

y, X = dmatrices('np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)', data = train)leverage_compute(X[0,:], X)0.0026426973869101977If the leverage for a new observation is higher than the maximum leverage among all the observations in the training dataset, then prediction at the new observation will be extrapolation.

7.3 Influential points

Observations that are both high leverage points and outliers are influential points that may affect the regression line. Let’s remove these influential points from the data and see if it improves the model prediction accuracy on test data.

#Dropping influential points from data

train_filtered = train.drop(np.intersect1d(np.where(np.abs(out.student_resid) > critical_value)[0],

(np.where(leverage>high_leverage_threshold)[0])))Note that as the Bonferroni’s critical value is very conservative estimate, we have rounded off the critical value to 4, instead of 4.42.

train_filtered.shape(4948, 11)#Number of points removed as they were influential

train.shape[0]-train_filtered.shape[0]12We removed 12 influential data points from the training data.

#Model after removing the influential observations

ols_object = smf.ols(formula = 'np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)', data = train_filtered)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | np.log(price) | R-squared: | 0.815 |

| Model: | OLS | Adj. R-squared: | 0.814 |

| Method: | Least Squares | F-statistic: | 1971. |

| Date: | Sun, 10 Mar 2024 | Prob (F-statistic): | 0.00 |

| Time: | 16:54:08 | Log-Likelihood: | -1027.9 |

| No. Observations: | 4948 | AIC: | 2080. |

| Df Residuals: | 4936 | BIC: | 2158. |

| Df Model: | 11 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | -256.2339 | 25.421 | -10.080 | 0.000 | -306.070 | -206.398 |

| year | 0.1317 | 0.013 | 10.462 | 0.000 | 0.107 | 0.156 |

| engineSize | 18.4650 | 5.663 | 3.261 | 0.001 | 7.364 | 29.566 |

| mileage | 0.0006 | 0.000 | 4.288 | 0.000 | 0.000 | 0.001 |

| mpg | -1.1810 | 0.338 | -3.489 | 0.000 | -1.845 | -0.517 |

| year:engineSize | -0.0090 | 0.003 | -3.208 | 0.001 | -0.015 | -0.004 |

| year:mileage | -2.933e-07 | 6.7e-08 | -4.374 | 0.000 | -4.25e-07 | -1.62e-07 |

| year:mpg | 0.0006 | 0.000 | 3.458 | 0.001 | 0.000 | 0.001 |

| engineSize:mileage | -4.316e-07 | 4e-07 | -1.080 | 0.280 | -1.21e-06 | 3.52e-07 |

| engineSize:mpg | 0.0048 | 0.000 | 11.537 | 0.000 | 0.004 | 0.006 |

| mileage:mpg | 7.254e-08 | 1.75e-08 | 4.140 | 0.000 | 3.82e-08 | 1.07e-07 |

| I(mileage ** 2) | 1.668e-11 | 5.53e-12 | 3.017 | 0.003 | 5.84e-12 | 2.75e-11 |

| Omnibus: | 718.619 | Durbin-Watson: | 0.521 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 2512.509 |

| Skew: | 0.714 | Prob(JB): | 0.00 |

| Kurtosis: | 6.185 | Cond. No. | 1.75e+13 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.75e+13. This might indicate that there are

strong multicollinearity or other numerical problems.

Let us compare the square root of 5-fold cross-validated mean squared error of the model with and without the influential points.

y, X = dmatrices('np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)', data = train)

np.sqrt(mean_squared_error(np.exp(cross_val_predict(LinearRegression(), X, y)), np.exp(y)))9811.74078331643y, X = dmatrices('np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)', data = train_filtered)

np.sqrt(mean_squared_error(np.exp(cross_val_predict(LinearRegression(), X, y)), np.exp(y)))9800.202063309154Why can’t we use cross_val_score() instead of cross_val_predict() here?

There seems to be a slight improvement in prediction error after removing influential points. Note that none of the points had “very high leverage”, and thus the change is not substantial.

Note that we obtain a higher R-squared value of 81.5% as compared to 80% with the complete data. Removing the influential points helped obtain a slightly better model fit. However, that may also happen just by reducing observations.

#Computing RMSE on test data

pred_price_log = model_log.predict(testf)

np.sqrt(((testp.price - np.exp(pred_price_log))**2).mean())8922.977452912108The RMSE on test data has also reduced. This shows that some of the influential points were impacting the regression line. With those points removed, the model better captures the general trend in the data.

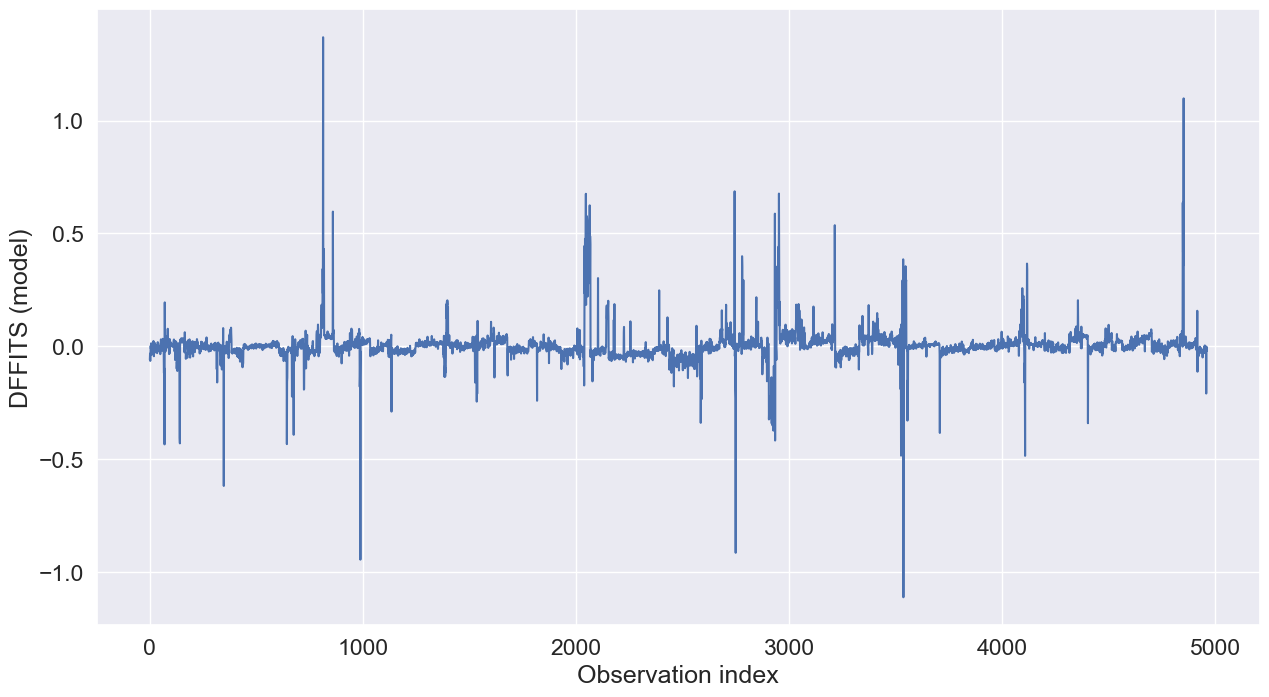

7.3.1 Influence on single fitted value (DFFITS)

- A useful measure of the influence that the \(i^{th}\) observation has on the fitted value \(\hat{Y}_i\) is:

\[\begin{equation} (DFFITS)_i = \frac{\hat{Y}_i-\hat{Y}_{i(i)}}{\sqrt{MSE_{i}h_i}} \end{equation}\]

Note that the denominator in the above fraction is the estimated standard deviation of \(\hat{Y}_i\), but uses the error mean square when the \(i^{th}\) observation is omitted.

\(DFFITS\) for the \(i^{th}\) observation represents the number of estimated standard deviations of \(\hat{Y}_i\) that the fitted value \(\hat{Y}_i\) increases or decreases with the inclusion of the \(i^{th}\) observation in fitting the regression model.

It can be shown that:

\[\begin{equation} (DFFITS)_i = t_i\sqrt{\frac{h_i}{1-h_i}} \end{equation}\]

where \(t_i\) is the studentized deleted residual for the \(i^{th}\) observation.

We can see that if an observation has high leverage and is an outlier, it is likely to be influential

For large datasets, an observation is considered influential if the magnitude of \(DFFITS\) for it exceeds \(2\sqrt{\frac{p}{n}}\)

sns.set(font_scale =1.5)

sns.lineplot(x = range(train.shape[0]), y = influence.dffits[0])

plt.xlabel('Observation index')

plt.ylabel('DFFITS (model)');

Let us analyze the point with the highest \(DFFITS\).

np.where(influence.dffits[0]>1)(array([ 813, 4851], dtype=int64),)train.loc[813,:]carID 12454

brand vw

model Caravelle

year 2012

transmission Semi-Auto

mileage 212000

fuelType Diesel

tax 325

mpg 34.4424

engineSize 2.0

price 11995

Name: 813, dtype: objecttrain.loc[train.model == ' Caravelle','mileage'].describe()count 65.000000

mean 25638.692308

std 42954.135726

min 10.000000

25% 3252.000000

50% 6900.000000

75% 30414.000000

max 212000.000000

Name: mileage, dtype: float64# Prediction with model developed based on all points

ols_object = smf.ols(formula = 'np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)',

data = train)

model_log = ols_object.fit();

np.exp(model_log.predict(train.loc[[813],:]))813 5502.647323

dtype: float64# Prediction with model developed based on all points except the 813th point

ols_object = smf.ols(formula = 'np.log(price)~(year+engineSize+mileage+mpg)**2+I(mileage**2)',

data = train.drop(index = 813))

model_log = ols_object.fit();

np.exp(model_log.predict(train.loc[[813],:]))813 4581.374593

dtype: float64Let us see the leverage and studentized residual for this observation.

# Leverage

leverage[813]0.19038697461006687# Studentized residual

out.student_resid[813]2.823478041409651Do you notice what may be contributing to the high influence of this point?

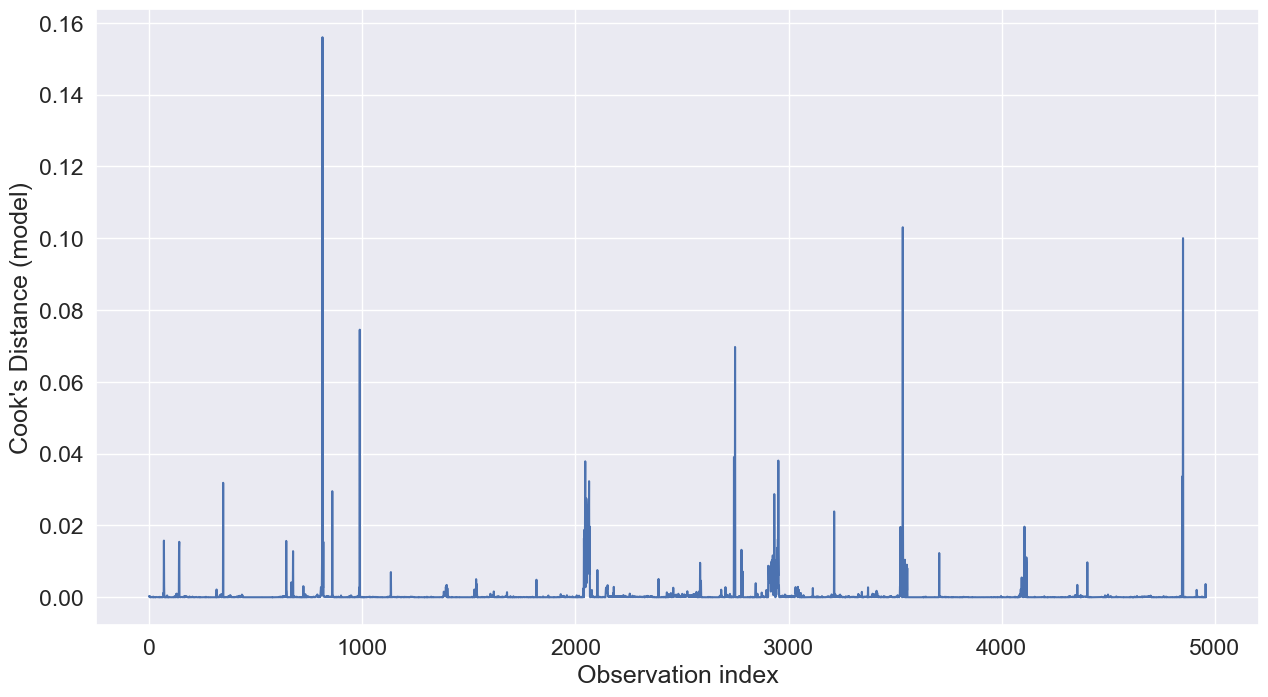

7.3.2 Influence on all fitted values (Cook’s distance)

In contrast to \(DFFITS\), which considers the influence of the \(i^{th}\) observation on the fitted value \(\hat{Y}_i\), Cook’s distance considers the influence of the \(i^{th}\) observation on all \(n\) the fitted values:

\[\begin{equation} D_i = \frac{\sum_{j=1}^{n} (\hat{Y}_j - \hat{Y}_{j(i)})^2}{pMSE} \end{equation}\]

It can be shown that:

\[\begin{equation} D_i = \frac{e_i^2}{pMSE}\bigg[\frac{h_i}{(1-h_i)^2}\bigg] \end{equation}\]

The larger \(h_i\) or \(e_i\), the larger is \(D_i\). \(D_i\) can be related to the \(F(p, n- p)\) distribution. If the percentile value is 50% or more, the observation is considered as highly influential.

Cook’s distance is considered high if it is greater than 0.5 and extreme if it is greater than 1.

sns.set(font_scale =1.5)

sns.lineplot(x = range(train.shape[0]), y = influence.cooks_distance[0])

plt.xlabel('Observation index')

plt.ylabel("Cook's Distance (model)");

# Point with the highest Cook's distance

np.where(influence.cooks_distance[0]>0.15)(array([813], dtype=int64),)The critical Cook’s distance value for a point to be highly influential in this dataset is:

stats.f.ppf(0.5, 11, 4949)0.9402181103263811Thus, we don’t have any highly influential points in the dataset.

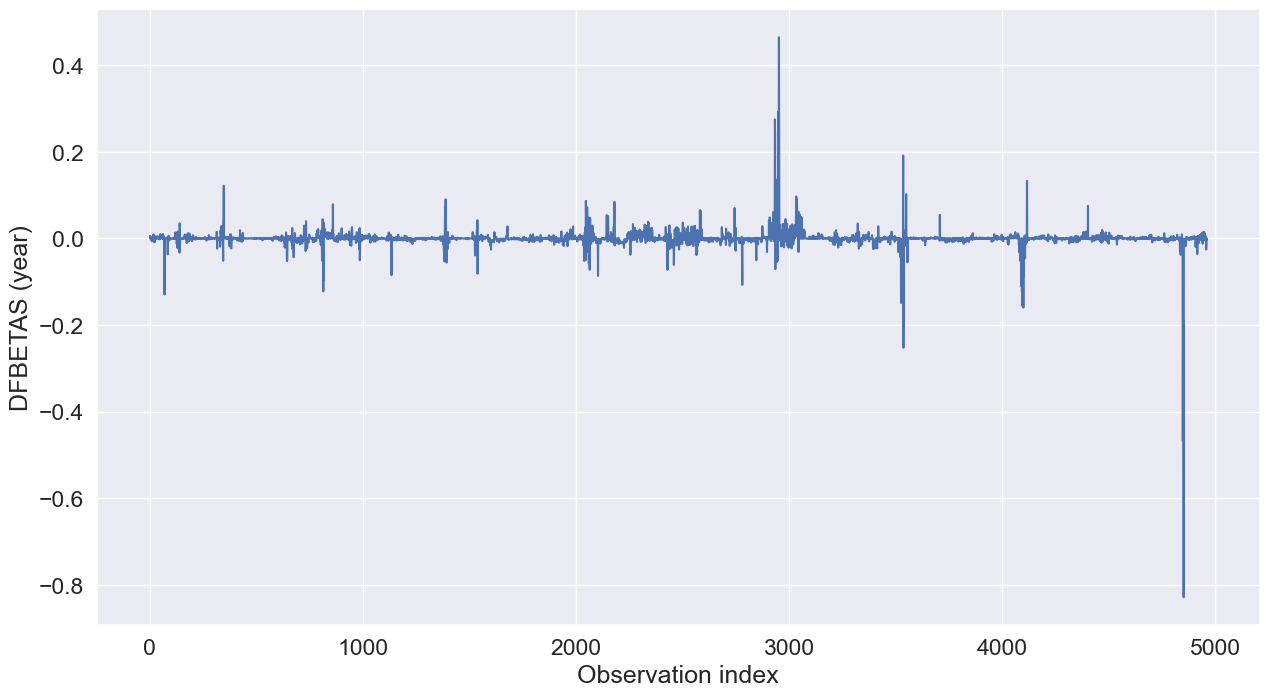

7.3.3 Influence on regression coefficients (DFBETAS)

\(DFBETAS\) measures the influence of the \(i^{th}\) observation on the regression coefficient.

\(DFBETAS\) of the \(i^{th}\) observation on the \(k^{th}\) regression coefficient is:

\[\begin{equation} (DFBETAS)_{k(i)} = \frac{\hat{\beta}_k-\hat{\beta}_{k(i)}}{\sqrt{MSE_ic_{k}}} \end{equation}\]

where \(c_k\) is the \(k^{th}\) diagonal element of \((X^TX)^{-1}\).

For large datasets, an observation is considered influential if \(DFBETAS\) exceeds \(\frac{2}{\sqrt{n}}\).

Below is the plot of \(DFBETAS\) for the year predictor against the observation index.

sns.set(font_scale =1.5)

sns.lineplot(x = range(train.shape[0]), y = influence.dfbetas[:,1])

plt.xlabel('Observation index')

plt.ylabel("DFBETAS (year)");

Let us analyze the point with the highest magnitude of \(DFBETAS\).

np.where(influence.dfbetas[:,1]<-0.8)(array([4851], dtype=int64),)train.year.describe()count 4960.000000

mean 2016.737903

std 2.884035

min 1997.000000

25% 2016.000000

50% 2017.000000

75% 2019.000000

max 2020.000000

Name: year, dtype: float64train.loc[train.year<=2002,:]| carID | brand | model | year | transmission | mileage | fuelType | tax | mpg | engineSize | price | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 330 | 13200 | audi | A8 | 1997 | Automatic | 122000 | Petrol | 265 | 19.3511 | 4.2 | 4650 |

| 732 | 13988 | vw | Beetle | 2001 | Manual | 47729 | Petrol | 330 | 32.5910 | 2.0 | 2490 |

| 3157 | 18794 | ford | Puma | 2002 | Manual | 108000 | Petrol | 230 | 38.5757 | 1.7 | 2195 |

| 3525 | 19395 | merc | S Class | 2001 | Automatic | 108800 | Diesel | 325 | 31.5473 | 3.2 | 1695 |

| 3532 | 17531 | merc | S Class | 1999 | Automatic | 34000 | Petrol | 145 | 24.8735 | 3.2 | 5995 |

| 3533 | 18761 | merc | S Class | 2001 | Automatic | 66000 | Petrol | 570 | 24.7744 | 3.2 | 4495 |

| 3535 | 18813 | merc | S Class | 1998 | Automatic | 43534 | Petrol | 265 | 23.2962 | 6.0 | 19990 |

| 3536 | 17891 | merc | S Class | 2002 | Automatic | 24000 | Petrol | 570 | 20.7968 | 5.0 | 6995 |

| 3707 | 18746 | hyundi | Santa Fe | 2002 | Manual | 94000 | Petrol | 325 | 30.2671 | 2.4 | 1200 |

| 4091 | 12995 | merc | SLK | 1998 | Automatic | 113557 | Petrol | 265 | 31.8368 | 2.3 | 1990 |

| 4094 | 19585 | merc | SLK | 2001 | Automatic | 69234 | Petrol | 325 | 30.8839 | 2.0 | 3990 |

| 4096 | 14265 | merc | SLK | 2001 | Automatic | 48172 | Petrol | 325 | 29.7058 | 2.3 | 3990 |

| 4097 | 15821 | merc | SLK | 2002 | Automatic | 61400 | Petrol | 325 | 29.6568 | 2.3 | 3990 |

| 4098 | 13021 | merc | SLK | 2001 | Automatic | 91000 | Petrol | 325 | 30.3248 | 2.3 | 3950 |

| 4099 | 12660 | merc | SLK | 2001 | Automatic | 42087 | Petrol | 325 | 29.9404 | 2.3 | 4490 |

| 4101 | 17521 | merc | SLK | 2002 | Automatic | 75034 | Petrol | 325 | 30.1380 | 2.3 | 4990 |

| 4107 | 13977 | merc | SLK | 2000 | Automatic | 87000 | Petrol | 265 | 27.2998 | 3.2 | 1490 |

| 4108 | 18679 | merc | SLK | 2000 | Automatic | 113237 | Petrol | 270 | 26.8765 | 3.2 | 3990 |

| 4109 | 14598 | merc | SLK | 2001 | Automatic | 64476 | Petrol | 325 | 27.4628 | 3.2 | 4990 |

| 4847 | 17268 | bmw | Z3 | 1997 | Manual | 49000 | Petrol | 270 | 34.9548 | 1.9 | 3950 |

| 4848 | 12137 | bmw | Z3 | 1999 | Manual | 58000 | Petrol | 270 | 35.3077 | 1.9 | 3950 |

| 4849 | 13288 | bmw | Z3 | 1999 | Manual | 74282 | Petrol | 245 | 35.4143 | 1.9 | 3995 |

| 4850 | 19172 | bmw | Z3 | 2001 | Manual | 60000 | Petrol | 325 | 30.7305 | 2.2 | 5950 |

| 4851 | 16577 | bmw | Z3 | 2002 | Automatic | 16500 | Petrol | 325 | 29.7614 | 2.2 | 14995 |

Let us see the leverage and studentized residual for this observation.

# Leverage

leverage[4851]0.047120455781282225# Studentized residual

out.student_resid[4851]4.938606329343604Do you see what makes this point influential?

7.4 Collinearity

Collinearity refers to the situation when two or more predictor variables have a high linear association. Linear association between a pair of variables can be measured by the correlation coefficient. Thus the correlation matrix can indicate some potential collinearity problems.

7.4.1 Why and how is collinearity a problem

(Source: page 100-101 of book)

The presence of collinearity can pose problems in the regression context, since it can be difficult to separate out the individual effects of collinear variables on the response.

Since collinearity reduces the accuracy of the estimates of the regression coefficients, it causes the standard error for \(\hat \beta_j\) to grow. Recall that the t-statistic for each predictor is calculated by dividing \(\hat \beta_j\) by its standard error. Consequently, collinearity results in a decline in the \(t\)-statistic. As a result, in the presence of collinearity, we may fail to reject \(H_0: \beta_j = 0\). This means that the power of the hypothesis test—the probability of correctly detecting a non-zero coefficient—is reduced by collinearity.

7.4.2 How to measure collinearity/multicollinearity

(Source: page 102 of book)

Unfortunately, not all collinearity problems can be detected by inspection of the correlation matrix: it is possible for collinearity to exist between three or more variables even if no pair of variables has a particularly high correlation. We call this situation multicollinearity. Instead of inspecting the correlation matrix, a better way to assess multicollinearity is to compute the variance inflation factor (VIF). The VIF is variance inflation factor the ratio of the variance of \(\hat \beta_j\) when fitting the full model divided by the variance of \(\hat \beta_j\) if fit on its own. The smallest possible value for VIF is 1, which indicates the complete absence of collinearity. Typically in practice there is a small amount of collinearity among the predictors. As a rule of thumb, a VIF value that exceeds 5 or 10 indicates a problematic amount of collinearity.

The estimated variance of the coefficient \(\beta_j\), of the \(j^{th}\) predictor \(X_j\), can be expressed as:

\[\hat{var}(\hat{\beta_j}) = \frac{(\hat{\sigma})^2}{(n-1)\hat{var}({X_j})}.\frac{1}{1-R^2_{X_j|X_{-j}}},\]

where \(R^2_{X_j|X_{-j}}\) is the \(R\)-squared for the regression of \(X_j\) on the other covariates (a regression that does not involve the response variable \(Y\)).

In case of simple linear regression, the variance expression in the equation above does not contain the term \(\frac{1}{1-R^2_{X_j|X_{-j}}}\), as there is only one predictor. However, in case of multiple linear regression, the variance of the estimate of the \(j^{th}\) coefficient (\(\hat{\beta_j}\)) gets inflated by a factor of \(\frac{1}{1-R^2_{X_j|X_{-j}}}\) (Note that in the complete absence of collinearity, \(R^2_{X_j|X_{-j}}=0\), and the value of this factor will be 1).

Thus, the Variance inflation factor, or the VIF for the estimated coefficient of the \(j^{th}\) predictor \(X_j\) is:

\[\begin{equation} VIF(\hat \beta_j) = \frac{1}{1-R^2_{X_j|X_{-j}}} \end{equation}\]

#Correlation matrix

train.corr()| carID | year | mileage | tax | mpg | engineSize | price | |

|---|---|---|---|---|---|---|---|

| carID | 1.000000 | 0.006251 | -0.001320 | 0.023806 | -0.010774 | 0.011365 | 0.012129 |

| year | 0.006251 | 1.000000 | -0.768058 | -0.205902 | -0.057093 | 0.014623 | 0.501296 |

| mileage | -0.001320 | -0.768058 | 1.000000 | 0.133744 | 0.125376 | -0.006459 | -0.478705 |

| tax | 0.023806 | -0.205902 | 0.133744 | 1.000000 | -0.488002 | 0.465282 | 0.144652 |

| mpg | -0.010774 | -0.057093 | 0.125376 | -0.488002 | 1.000000 | -0.419417 | -0.369919 |

| engineSize | 0.011365 | 0.014623 | -0.006459 | 0.465282 | -0.419417 | 1.000000 | 0.624899 |

| price | 0.012129 | 0.501296 | -0.478705 | 0.144652 | -0.369919 | 0.624899 | 1.000000 |

Let us compute the Variance Inflation Factor (VIF) for the four predictors.

X = train[['mpg','year','mileage','engineSize']]X.columns[1:]Index(['year', 'mileage', 'engineSize'], dtype='object')from statsmodels.stats.outliers_influence import variance_inflation_factor

from statsmodels.tools.tools import add_constant

X = add_constant(X)

vif_data = pd.DataFrame()

vif_data["feature"] = X.columns

for i in range(len(X.columns)):

vif_data.loc[i,'VIF'] = variance_inflation_factor(X.values, i)

print(vif_data) feature VIF

0 const 1.201579e+06

1 mpg 1.243040e+00

2 year 2.452891e+00

3 mileage 2.490210e+00

4 engineSize 1.219170e+00As all the values of VIF are close to one, we do not have the problem of multicollinearity in the model. Note that the VIF of year and mileage is relatively high as they are the most correlated.

Q1: Why is the VIF of the constant so high?

Q2: Why do we need to include the constant while finding the VIF?

7.4.3 Manual computation of VIF

#Manually computing the VIF for year

ols_object = smf.ols(formula = 'price~mpg', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | price | R-squared: | 0.137 |

| Model: | OLS | Adj. R-squared: | 0.137 |

| Method: | Least Squares | F-statistic: | 786.0 |

| Date: | Wed, 06 Mar 2024 | Prob (F-statistic): | 1.14e-160 |

| Time: | 17:04:39 | Log-Likelihood: | -54812. |

| No. Observations: | 4960 | AIC: | 1.096e+05 |

| Df Residuals: | 4958 | BIC: | 1.096e+05 |

| Df Model: | 1 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | 4.144e+04 | 676.445 | 61.258 | 0.000 | 4.01e+04 | 4.28e+04 |

| mpg | -374.2975 | 13.351 | -28.036 | 0.000 | -400.471 | -348.124 |

| Omnibus: | 2132.208 | Durbin-Watson: | 0.320 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 13751.995 |

| Skew: | 1.942 | Prob(JB): | 0.00 |

| Kurtosis: | 10.174 | Cond. No. | 158. |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

(13.351/9.338)**22.044183378279958#Manually computing the VIF for year

ols_object = smf.ols(formula = 'price~year+mpg+engineSize+mileage', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | price | R-squared: | 0.660 |

| Model: | OLS | Adj. R-squared: | 0.660 |

| Method: | Least Squares | F-statistic: | 2410. |

| Date: | Wed, 06 Mar 2024 | Prob (F-statistic): | 0.00 |

| Time: | 17:01:18 | Log-Likelihood: | -52497. |

| No. Observations: | 4960 | AIC: | 1.050e+05 |

| Df Residuals: | 4955 | BIC: | 1.050e+05 |

| Df Model: | 4 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | -3.661e+06 | 1.49e+05 | -24.593 | 0.000 | -3.95e+06 | -3.37e+06 |

| year | 1817.7366 | 73.751 | 24.647 | 0.000 | 1673.151 | 1962.322 |

| mpg | -79.3126 | 9.338 | -8.493 | 0.000 | -97.620 | -61.006 |

| engineSize | 1.218e+04 | 189.969 | 64.107 | 0.000 | 1.18e+04 | 1.26e+04 |

| mileage | -0.1474 | 0.009 | -16.817 | 0.000 | -0.165 | -0.130 |

| Omnibus: | 2450.973 | Durbin-Watson: | 0.541 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 31060.548 |

| Skew: | 2.045 | Prob(JB): | 0.00 |

| Kurtosis: | 14.557 | Cond. No. | 3.83e+07 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 3.83e+07. This might indicate that there are

strong multicollinearity or other numerical problems.

#Manually computing the VIF for year

ols_object = smf.ols(formula = 'year~mpg+engineSize+mileage', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | year | R-squared: | 0.592 |

| Model: | OLS | Adj. R-squared: | 0.592 |

| Method: | Least Squares | F-statistic: | 2400. |

| Date: | Wed, 06 Mar 2024 | Prob (F-statistic): | 0.00 |

| Time: | 17:00:13 | Log-Likelihood: | -10066. |

| No. Observations: | 4960 | AIC: | 2.014e+04 |

| Df Residuals: | 4956 | BIC: | 2.017e+04 |

| Df Model: | 3 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | 2018.3135 | 0.140 | 1.44e+04 | 0.000 | 2018.039 | 2018.588 |

| mpg | 0.0095 | 0.002 | 5.301 | 0.000 | 0.006 | 0.013 |

| engineSize | 0.1171 | 0.037 | 3.203 | 0.001 | 0.045 | 0.189 |

| mileage | -9.139e-05 | 1.08e-06 | -84.615 | 0.000 | -9.35e-05 | -8.93e-05 |

| Omnibus: | 2949.664 | Durbin-Watson: | 1.161 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 63773.271 |

| Skew: | -2.426 | Prob(JB): | 0.00 |

| Kurtosis: | 19.883 | Cond. No. | 1.91e+05 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.91e+05. This might indicate that there are

strong multicollinearity or other numerical problems.

#VIF for year

1/(1-0.592)2.4509803921568625Note that year and mileage have a high linear correlation. Removing one of them should decrease the standard error of the coefficient of the other, without significanty decrease R-squared.

ols_object = smf.ols(formula = 'price~mpg+engineSize+mileage+year', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | price | R-squared: | 0.660 |

| Model: | OLS | Adj. R-squared: | 0.660 |

| Method: | Least Squares | F-statistic: | 2410. |

| Date: | Tue, 07 Feb 2023 | Prob (F-statistic): | 0.00 |

| Time: | 21:39:45 | Log-Likelihood: | -52497. |

| No. Observations: | 4960 | AIC: | 1.050e+05 |

| Df Residuals: | 4955 | BIC: | 1.050e+05 |

| Df Model: | 4 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | -3.661e+06 | 1.49e+05 | -24.593 | 0.000 | -3.95e+06 | -3.37e+06 |

| mpg | -79.3126 | 9.338 | -8.493 | 0.000 | -97.620 | -61.006 |

| engineSize | 1.218e+04 | 189.969 | 64.107 | 0.000 | 1.18e+04 | 1.26e+04 |

| mileage | -0.1474 | 0.009 | -16.817 | 0.000 | -0.165 | -0.130 |

| year | 1817.7366 | 73.751 | 24.647 | 0.000 | 1673.151 | 1962.322 |

| Omnibus: | 2450.973 | Durbin-Watson: | 0.541 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 31060.548 |

| Skew: | 2.045 | Prob(JB): | 0.00 |

| Kurtosis: | 14.557 | Cond. No. | 3.83e+07 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 3.83e+07. This might indicate that there are

strong multicollinearity or other numerical problems.

Removing mileage from the above regression.

ols_object = smf.ols(formula = 'price~mpg+engineSize+year', data = train)

model_log = ols_object.fit()

model_log.summary()| Dep. Variable: | price | R-squared: | 0.641 |

| Model: | OLS | Adj. R-squared: | 0.641 |

| Method: | Least Squares | F-statistic: | 2951. |

| Date: | Tue, 07 Feb 2023 | Prob (F-statistic): | 0.00 |

| Time: | 21:40:00 | Log-Likelihood: | -52635. |

| No. Observations: | 4960 | AIC: | 1.053e+05 |

| Df Residuals: | 4956 | BIC: | 1.053e+05 |

| Df Model: | 3 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

| Intercept | -5.586e+06 | 9.78e+04 | -57.098 | 0.000 | -5.78e+06 | -5.39e+06 |

| mpg | -101.9120 | 9.500 | -10.727 | 0.000 | -120.536 | -83.288 |

| engineSize | 1.196e+04 | 194.848 | 61.392 | 0.000 | 1.16e+04 | 1.23e+04 |

| year | 2771.1844 | 48.492 | 57.147 | 0.000 | 2676.118 | 2866.251 |

| Omnibus: | 2389.075 | Durbin-Watson: | 0.528 |

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 26920.051 |

| Skew: | 2.018 | Prob(JB): | 0.00 |

| Kurtosis: | 13.675 | Cond. No. | 1.41e+06 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.41e+06. This might indicate that there are

strong multicollinearity or other numerical problems.

Note that the standard error of the coefficient of year has reduced from 73 to 48, without any large reduction in R-squared.

7.4.4 When can we overlook multicollinearity?

The severity of the problems increases with the degree of the multicollinearity. Therefore, if there is only moderate multicollinearity (5 < VIF < 10), we may overlook it.

Multicollinearity affects only the standard errors of the coefficients of collinear predictors. Therefore, if multicollinearity is not present for the predictors that we are particularly interested in, we may not need to resolve it.

Multicollinearity affects the standard error of the coefficients and thereby their \(p\)-values, but in general, it does not influence the prediction accuracy, except in the case that the coefficients are so unstable that the predictions are outside of the domain space of the response. If our sole aim is prediction, and we don’t wish to infer the statistical significance of predictors, then we may avoid addressing multicollinearity. “The fact that some or all predictor variables are correlated among themselves does not, in general, inhibit our ability to obtain a good fit nor does it tend to affect inferences about mean responses or predictions of new observations, provided these inferences are made within the region of observations” - Neter, John, Michael H. Kutner, Christopher J. Nachtsheim, and William Wasserman. “Applied linear statistical models.” (1996): 318.