import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import cross_val_score,train_test_split, KFold, cross_val_predict

from sklearn.metrics import mean_squared_error,r2_score,roc_curve,auc,precision_recall_curve, accuracy_score, \

recall_score, precision_score, confusion_matrix

from sklearn.tree import DecisionTreeRegressor,DecisionTreeClassifier

from sklearn.model_selection import GridSearchCV, ParameterGrid, StratifiedKFold, RandomizedSearchCV

from sklearn.ensemble import VotingRegressor, VotingClassifier, StackingRegressor, StackingClassifier, GradientBoostingRegressor,GradientBoostingClassifier, BaggingRegressor,BaggingClassifier,RandomForestRegressor,RandomForestClassifier,AdaBoostRegressor,AdaBoostClassifier

from sklearn.linear_model import LinearRegression,LogisticRegression, LassoCV, RidgeCV, ElasticNetCV

from sklearn.neighbors import KNeighborsRegressor

import itertools as it

import time as time

import xgboost as xgb

from skopt import BayesSearchCV

from skopt.space import Real, Categorical, Integer

from skopt.plots import plot_objective, plot_histogram, plot_convergence

import warnings

from IPython import display12 XGBoost

XGBoost is a very recently developed algorithm (2016). Thus, it’s not yet there in standard textbooks. Here are some resources for it.

12.1 Hyperparameters

The following are some of the important hyperparameters to tune in XGBoost:

Number of trees (

n_estimators)Depth of each tree (

max_depth)Learning rate (

learning_rate)Sampling observations / predictors (

subsamplefor observations,colsample_bytreefor predictors)Regularization parameters (

reg_lambda&gamma)

However, there are other hyperparameters that can be tuned as well. Check out the list of all hyperparameters in the XGBoost documentation.

#Using the same datasets as used for linear regression in STAT303-2,

#so that we can compare the non-linear models with linear regression

trainf = pd.read_csv('./Datasets/Car_features_train.csv')

trainp = pd.read_csv('./Datasets/Car_prices_train.csv')

testf = pd.read_csv('./Datasets/Car_features_test.csv')

testp = pd.read_csv('./Datasets/Car_prices_test.csv')

train = pd.merge(trainf,trainp)

test = pd.merge(testf,testp)

train.head()| carID | brand | model | year | transmission | mileage | fuelType | tax | mpg | engineSize | price | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 18473 | bmw | 6 Series | 2020 | Semi-Auto | 11 | Diesel | 145 | 53.3282 | 3.0 | 37980 |

| 1 | 15064 | bmw | 6 Series | 2019 | Semi-Auto | 10813 | Diesel | 145 | 53.0430 | 3.0 | 33980 |

| 2 | 18268 | bmw | 6 Series | 2020 | Semi-Auto | 6 | Diesel | 145 | 53.4379 | 3.0 | 36850 |

| 3 | 18480 | bmw | 6 Series | 2017 | Semi-Auto | 18895 | Diesel | 145 | 51.5140 | 3.0 | 25998 |

| 4 | 18492 | bmw | 6 Series | 2015 | Automatic | 62953 | Diesel | 160 | 51.4903 | 3.0 | 18990 |

X = train[['mileage','mpg','year','engineSize']]

Xtest = test[['mileage','mpg','year','engineSize']]

y = train['price']

ytest = test['price']12.2 XGBoost for regression

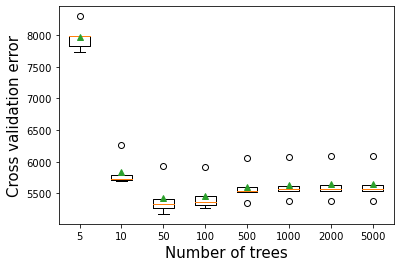

12.2.1 Number of trees vs cross validation error

As the number of trees increase, the prediction bias will decrease. Like gradient boosting is relatively robust (as compared to AdaBoost) to over-fitting (why?) so a large number usually results in better performance. Note that the number of trees still need to be tuned for optimal performance.

def get_models():

models = dict()

# define number of trees to consider

n_trees = [5, 10, 50, 100, 500, 1000, 2000, 5000]

for n in n_trees:

models[str(n)] = xgb.XGBRegressor(n_estimators=n,random_state=1)

return models

# evaluate a given model using cross-validation

def evaluate_model(model, X, y):

# define the evaluation procedure

cv = KFold(n_splits=5, shuffle=True, random_state=1)

# evaluate the model and collect the results

scores = np.sqrt(-cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv, n_jobs=-1))

return scores

# get the models to evaluate

models = get_models()

# evaluate the models and store results

results, names = list(), list()

for name, model in models.items():

# evaluate the model

scores = evaluate_model(model, X, y)

# store the results

results.append(scores)

names.append(name)

# summarize the performance along the way

print('>%s %.3f (%.3f)' % (name, np.mean(scores), np.std(scores)))

# plot model performance for comparison

plt.boxplot(results, labels=names, showmeans=True)

plt.ylabel('Cross validation error',fontsize=15)

plt.xlabel('Number of trees',fontsize=15)>5 7961.485 (192.906)

>10 5837.134 (217.986)

>50 5424.788 (263.890)

>100 5465.396 (237.938)

>500 5608.350 (235.903)

>1000 5635.159 (236.664)

>2000 5642.669 (236.192)

>5000 5643.411 (236.074)Text(0.5, 0, 'Number of trees')

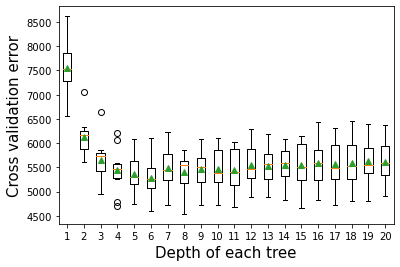

12.2.2 Depth of tree vs cross validation error

As the depth of each weak learner (decision tree) increases, the complexity of the weak learner will increase. As the complexity increases, the prediction bias will decrease, while the prediction variance will increase. Thus, there will be an optimal depth of each weak learner that minimizes the prediction error.

# get a list of models to evaluate

def get_models():

models = dict()

# explore depths from 1 to 10

for i in range(1,21):

# define ensemble model

models[str(i)] = xgb.XGBRegressor(random_state=1,max_depth=i)

return models

# evaluate a given model using cross-validation

def evaluate_model(model, X, y):

# define the evaluation procedure

cv = KFold(n_splits=10, shuffle=True, random_state=1)

# evaluate the model and collect the results

scores = np.sqrt(-cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv, n_jobs=-1))

return scores

# get the models to evaluate

models = get_models()

# evaluate the models and store results

results, names = list(), list()

for name, model in models.items():

# evaluate the model

scores = evaluate_model(model, X, y)

# store the results

results.append(scores)

names.append(name)

# summarize the performance along the way

print('>%s %.3f (%.3f)' % (name, np.mean(scores), np.std(scores)))

plt.boxplot(results, labels=names, showmeans=True)

plt.ylabel('Cross validation error',fontsize=15)

plt.xlabel('Depth of each tree',fontsize=15)>1 7541.827 (545.951)

>2 6129.425 (393.357)

>3 5647.783 (454.318)

>4 5438.481 (453.726)

>5 5358.074 (379.431)

>6 5281.675 (383.848)

>7 5495.163 (459.356)

>8 5399.145 (380.437)

>9 5469.563 (384.004)

>10 5461.549 (416.630)

>11 5443.210 (432.863)

>12 5546.447 (412.097)

>13 5532.414 (369.131)

>14 5556.761 (362.746)

>15 5540.366 (452.612)

>16 5586.004 (451.199)

>17 5563.137 (464.344)

>18 5594.919 (480.221)

>19 5641.226 (451.713)

>20 5616.462 (417.405)Text(0.5, 0, 'Depth of each tree')

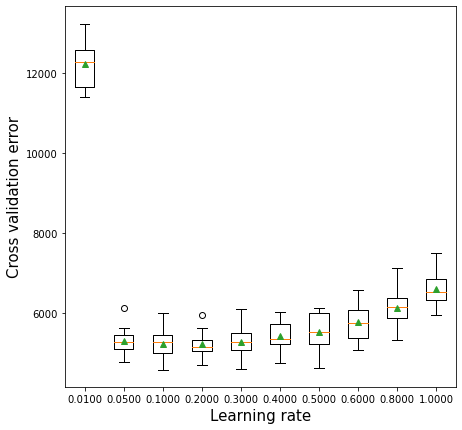

12.2.3 Learning rate vs cross validation error

The optimal learning rate will depend on the number of trees, and vice-versa. If the learning rate is too low, it will take several trees to “learn” the response. If the learning rate is high, the response will be “learned” quickly (with fewer) trees. Learning too quickly will be prone to overfitting, while learning too slowly will be computationally expensive. Thus, there will be an optimal learning rate to minimize the prediction error.

def get_models():

models = dict()

# explore learning rates from 0.1 to 2 in 0.1 increments

for i in [0.01,0.05,0.1,0.2,0.3,0.4,0.5,0.6,0.8,1.0]:

key = '%.4f' % i

models[key] = xgb.XGBRegressor(learning_rate=i,random_state=1)

return models

# evaluate a given model using cross-validation

def evaluate_model(model, X, y):

# define the evaluation procedure

cv = KFold(n_splits=10, shuffle=True, random_state=1)

# evaluate the model and collect the results

scores = np.sqrt(-cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv, n_jobs=-1))

return scores

# get the models to evaluate

models = get_models()

# evaluate the models and store results

results, names = list(), list()

for name, model in models.items():

# evaluate the model

scores = evaluate_model(model, X, y)

# store the results

results.append(scores)

names.append(name)

# summarize the performance along the way

print('>%s %.1f (%.1f)' % (name, np.mean(scores), np.std(scores)))

# plot model performance for comparison

plt.figure(figsize=(7, 7))

plt.boxplot(results, labels=names, showmeans=True)

plt.ylabel('Cross validation error',fontsize=15)

plt.xlabel('Learning rate',fontsize=15)>0.0100 12223.8 (636.7)

>0.0500 5298.5 (383.5)

>0.1000 5236.3 (397.5)

>0.2000 5221.5 (347.5)

>0.3000 5281.7 (383.8)

>0.4000 5434.1 (364.6)

>0.5000 5537.0 (471.9)

>0.6000 5767.4 (478.5)

>0.8000 6132.7 (472.5)

>1.0000 6593.6 (408.9)Text(0.5, 0, 'Learning rate')

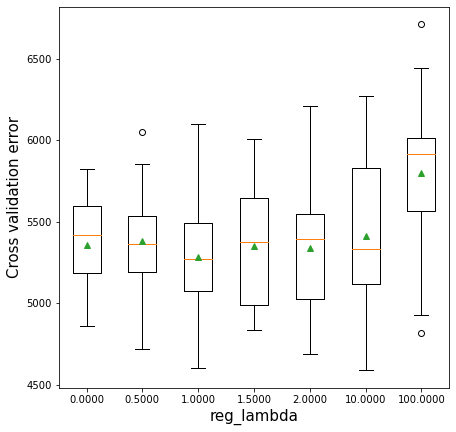

12.2.4 Regularization (reg_lambda) vs cross validation error

The parameter reg_lambda penalizes the L2 norm of the leaf scores. For example, in case of classification, it will penalize the summation of the square of log odds of the predicted probability. This penalization will tend to reduce the log odds, thereby reducing the tendency to overfit. “Reducing the log odds” in layman terms will mean not being overly sure about the prediction.

Without regularization, the algorithm will be closer to the gradient boosting algorithm. Regularization may provide some additional boost to prediction accuracy by reducing over-fitting. In the example below, regularization with reg_lambda=1 turns out to be better than no regularization (reg_lambda=0)*. Of course, too much regularization may increase bias so much such that it leads to a decrease in prediction accuracy.

def get_models():

models = dict()

# explore 'reg_lambda' from 0.1 to 2 in 0.1 increments

for i in [0,0.5,1.0,1.5,2,10,100]:

key = '%.4f' % i

models[key] = xgb.XGBRegressor(reg_lambda=i,random_state=1)

return models

# evaluate a given model using cross-validation

def evaluate_model(model, X, y):

# define the evaluation procedure

cv = KFold(n_splits=10, shuffle=True, random_state=1)

# evaluate the model and collect the results

scores = np.sqrt(-cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv, n_jobs=-1))

return scores

# get the models to evaluate

models = get_models()

# evaluate the models and store results

results, names = list(), list()

for name, model in models.items():

# evaluate the model

scores = evaluate_model(model, X, y)

# store the results

results.append(scores)

names.append(name)

# summarize the performance along the way

print('>%s %.1f (%.1f)' % (name, np.mean(scores), np.std(scores)))

# plot model performance for comparison

plt.figure(figsize=(7, 7))

plt.boxplot(results, labels=names, showmeans=True)

plt.ylabel('Cross validation error',fontsize=15)

plt.xlabel('reg_lambda',fontsize=15)>0.0000 5359.2 (317.0)

>0.5000 5382.7 (363.1)

>1.0000 5281.7 (383.8)

>1.5000 5348.0 (383.9)

>2.0000 5336.4 (426.6)

>10.0000 5410.9 (521.9)

>100.0000 5801.1 (563.7)Text(0.5, 0, 'reg_lambda')

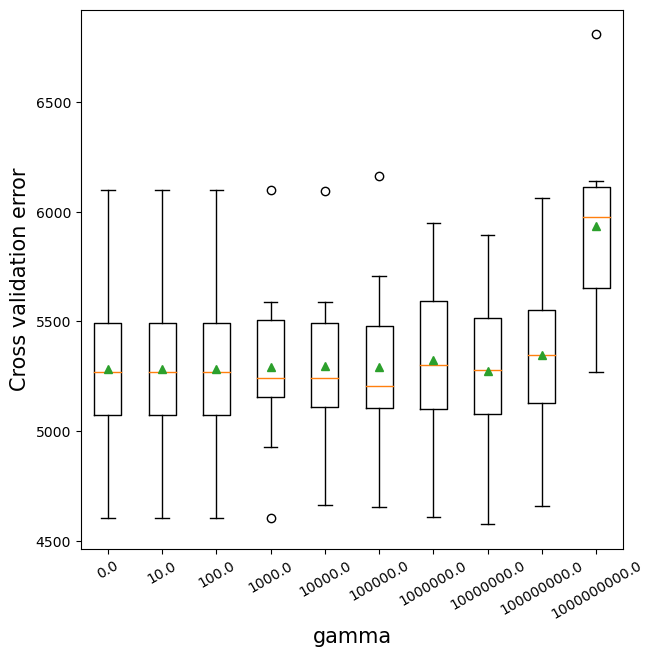

12.2.5 Regularization (gamma) vs cross validation error

The parameter gamma penalizes the tree based on the number of leaves. This is similar to the parameter alpha of cost complexity pruning. As gamma increases, more leaves will be pruned. Note that the previous parameter reg_lambda penalizes the leaf score, but does not prune the tree.

Without regularization, the algorithm will be closer to the gradient boosting algorithm. Regularization may provide some additional boost to prediction accuracy by reducing over-fitting. However, in the example below, no regularization (in terms of gamma=0) turns out to be better than a non-zero regularization. (reg_lambda=0).

def get_models():

models = dict()

# explore gamma from 0.1 to 2 in 0.1 increments

for i in [0,10,1e2,1e3,1e4,1e5,1e6,1e7,1e8,1e9]:

key = '%.4f' % i

models[key] = xgb.XGBRegressor(gamma=i,random_state=1)

return models

# evaluate a given model using cross-validation

def evaluate_model(model, X, y):

# define the evaluation procedure

cv = KFold(n_splits=10, shuffle=True, random_state=1)

# evaluate the model and collect the results

scores = np.sqrt(-cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv, n_jobs=-1))

return scores

# get the models to evaluate

models = get_models()

# evaluate the models and store results

results, names = list(), list()

for name, model in models.items():

# evaluate the model

scores = evaluate_model(model, X, y)

# store the results

results.append(scores)

names.append(name)

# summarize the performance along the way

print('>%s %.1f (%.1f)' % (name, np.mean(scores), np.std(scores)))

# plot model performance for comparison

plt.figure(figsize=(7, 7))

plt.boxplot(results, labels=names, showmeans=True)

plt.ylabel('Cross validation error',fontsize=15)

plt.xlabel('gamma',fontsize=15)

#ax.set_xticklabels(x.astype(int))

plt.xticks(ticks=plt.xticks()[0].astype(int), labels=np.round([0,10,1e2,1e3,1e4,1e5,1e6,1e7,1e8,1e9]),

rotation = 30);>0.0000 5281.7 (383.8)

>10.0000 5281.7 (383.8)

>100.0000 5281.7 (383.8)

>1000.0000 5291.8 (381.8)

>10000.0000 5295.7 (370.2)

>100000.0000 5293.0 (402.5)

>1000000.0000 5322.2 (368.9)

>10000000.0000 5273.7 (409.8)

>100000000.0000 5345.4 (373.9)

>1000000000.0000 5932.3 (397.6)

12.2.6 Tuning XGboost regressor

Along with max_depth, learning_rate, and n_estimators, here we tune reg_lambda - the regularization parameter for penalizing the tree predictions.

#K-fold cross validation to find optimal parameters for XGBoost

start_time = time.time()

param_grid = {'max_depth': [4,6,8],

'learning_rate': [0.01, 0.05, 0.1],

'reg_lambda':[0, 1, 10],

'n_estimators':[100, 500, 1000],

'gamma': [0, 10, 100],

'subsample': [0.5, 0.75, 1.0],

'colsample_bytree': [0.5, 0.75, 1.0]}

cv = KFold(n_splits=5,shuffle=True,random_state=1)

optimal_params = RandomizedSearchCV(estimator=xgb.XGBRegressor(random_state=1),

param_distributions = param_grid, n_iter = 200,

verbose = 1,

n_jobs=-1,

cv = cv)

optimal_params.fit(X,y)

print("Optimal parameter values =", optimal_params.best_params_)

print("Optimal cross validation R-squared = ",optimal_params.best_score_)

print("Time taken = ", round((time.time()-start_time)/60), " minutes")Fitting 5 folds for each of 200 candidates, totalling 1000 fits

Optimal parameter values = {'subsample': 0.75, 'reg_lambda': 1, 'n_estimators': 1000, 'max_depth': 8, 'learning_rate': 0.01, 'gamma': 100, 'colsample_bytree': 1.0}

Optimal cross validation R-squared = 0.9002580404500382

Time taken = 4 minutes#RMSE based on the optimal parameter values

np.sqrt(mean_squared_error(optimal_params.best_estimator_.predict(Xtest),ytest))5497.553788113875Let us use Bayes search to tune the model.

model = xgb.XGBRegressor(random_state = 1)

grid = {'max_leaves': Integer(4, 5000),

'learning_rate': Real(0.0001, 1.0),

'reg_lambda':Real(0, 1e4),

'n_estimators':Integer(2, 2000),

'gamma': Real(0, 1e11),

'subsample': Real(0.1,1.0),

'colsample_bytree': Real(0.1, 1.0)}

kfold = KFold(n_splits = 5, shuffle = True, random_state = 1)

gcv = BayesSearchCV(model, search_spaces = grid, cv = kfold, n_iter = 100, random_state = 1,

scoring = 'neg_root_mean_squared_error', n_jobs = -1)

paras = list(gcv.search_spaces.keys())

paras.sort()

def monitor(optim_result):

cv_values = pd.Series(optim_result['func_vals']).cummin()

display.clear_output(wait = True)

min_ind = pd.Series(optim_result['func_vals']).argmin()

print(paras, "=", optim_result['x_iters'][min_ind], pd.Series(optim_result['func_vals']).min())

sns.lineplot(cv_values)

plt.show()

gcv.fit(X, y, callback = monitor)['colsample_bytree', 'gamma', 'learning_rate', 'max_leaves', 'n_estimators', 'reg_lambda', 'subsample'] = [0.8455872906441244, 0.0, 0.9137620583590551, 802, 1023, 1394.0140479620452, 0.7987263539365876] 5340.272253124142

BayesSearchCV(cv=KFold(n_splits=5, random_state=1, shuffle=True),

estimator=XGBRegressor(base_score=None, booster=None,

callbacks=None, colsample_bylevel=None,

colsample_bynode=None,

colsample_bytree=None,

early_stopping_rounds=None,

enable_categorical=False, eval_metric=None,

feature_types=None, gamma=None,

gpu_id=None, grow_policy=None,

importance_type=None,

inte...

'learning_rate': Real(low=0.0001, high=1.0, prior='uniform', transform='normalize'),

'max_leaves': Integer(low=4, high=5000, prior='uniform', transform='normalize'),

'n_estimators': Integer(low=2, high=2000, prior='uniform', transform='normalize'),

'reg_lambda': Real(low=0, high=10000.0, prior='uniform', transform='normalize'),

'subsample': Real(low=0.1, high=1.0, prior='uniform', transform='normalize')})In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

BayesSearchCV(cv=KFold(n_splits=5, random_state=1, shuffle=True),

estimator=XGBRegressor(base_score=None, booster=None,

callbacks=None, colsample_bylevel=None,

colsample_bynode=None,

colsample_bytree=None,

early_stopping_rounds=None,

enable_categorical=False, eval_metric=None,

feature_types=None, gamma=None,

gpu_id=None, grow_policy=None,

importance_type=None,

inte...

'learning_rate': Real(low=0.0001, high=1.0, prior='uniform', transform='normalize'),

'max_leaves': Integer(low=4, high=5000, prior='uniform', transform='normalize'),

'n_estimators': Integer(low=2, high=2000, prior='uniform', transform='normalize'),

'reg_lambda': Real(low=0, high=10000.0, prior='uniform', transform='normalize'),

'subsample': Real(low=0.1, high=1.0, prior='uniform', transform='normalize')})XGBRegressor(base_score=None, booster=None, callbacks=None,

colsample_bylevel=None, colsample_bynode=None,

colsample_bytree=None, early_stopping_rounds=None,

enable_categorical=False, eval_metric=None, feature_types=None,

gamma=None, gpu_id=None, grow_policy=None, importance_type=None,

interaction_constraints=None, learning_rate=None, max_bin=None,

max_cat_threshold=None, max_cat_to_onehot=None,

max_delta_step=None, max_depth=None, max_leaves=None,

min_child_weight=None, missing=nan, monotone_constraints=None,

n_estimators=100, n_jobs=None, num_parallel_tree=None,

predictor=None, random_state=1, ...)XGBRegressor(base_score=None, booster=None, callbacks=None,

colsample_bylevel=None, colsample_bynode=None,

colsample_bytree=None, early_stopping_rounds=None,

enable_categorical=False, eval_metric=None, feature_types=None,

gamma=None, gpu_id=None, grow_policy=None, importance_type=None,

interaction_constraints=None, learning_rate=None, max_bin=None,

max_cat_threshold=None, max_cat_to_onehot=None,

max_delta_step=None, max_depth=None, max_leaves=None,

min_child_weight=None, missing=nan, monotone_constraints=None,

n_estimators=100, n_jobs=None, num_parallel_tree=None,

predictor=None, random_state=1, ...)model1 = xgb.XGBRegressor(random_state = 1, colsample_bytree = 0.85, gamma = 0, learning_rate = 0.91,

max_leaves = 802, n_estimators = 1023, reg_lambda = 1394, subsample = 0.8).fit(X, y)np.sqrt(mean_squared_error(model1.predict(Xtest),ytest))5466.076861800755We got a different set of optimal hyperparameters with Bayes search. Thus, ensembling the model based on the two sets of hyperparameters is likely to improve the accuracy over the individual models.

model2 = xgb.XGBRegressor(random_state = 1, colsample_bytree = 1.0, gamma = 100, learning_rate = 0.01,

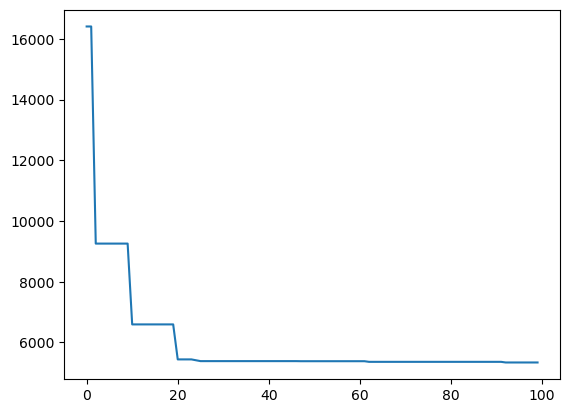

max_depth = 8, n_estimators = 1000, reg_lambda = 1, subsample = 0.75).fit(X, y)np.sqrt(mean_squared_error(0.5*model1.predict(Xtest)+0.5*model2.predict(Xtest),ytest))5393.37983422684512.2.7 Early stopping with XGBoost

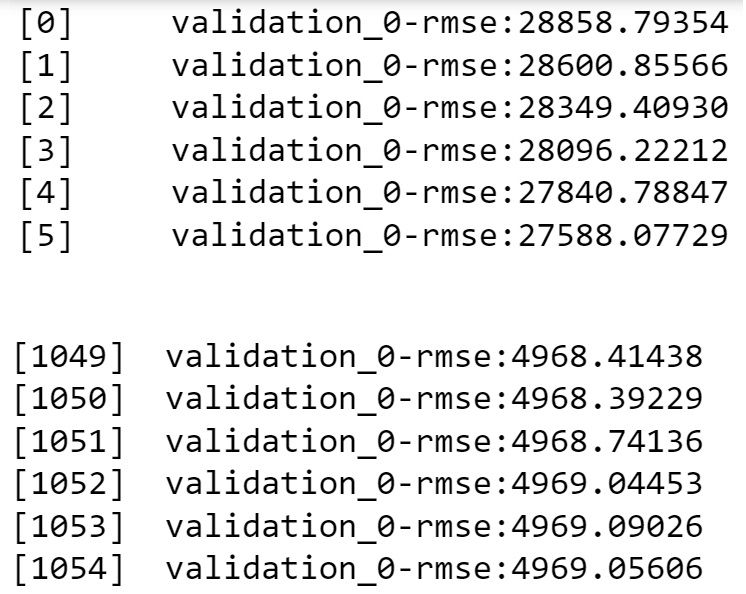

If we have a test dataset (or we can further split the train data into a smaller train and test data), we can use it with the early_stopping_rounds argument of XGBoost, where it will stop growing trees once the model accuracy fails to increase for a certain number of consecutive iterations, given as early_stopping_rounds.

X_train_sub, X_test_sub, y_train_sub, y_test_sub = \

train_test_split(X, y, test_size = 0.2, random_state = 45)model = xgb.XGBRegressor(random_state = 1, max_depth = 8, learning_rate = 0.01,

n_estimators = 20000,reg_lambda = 1, gamma = 100, subsample = 0.75, colsample_bytree = 1.0)

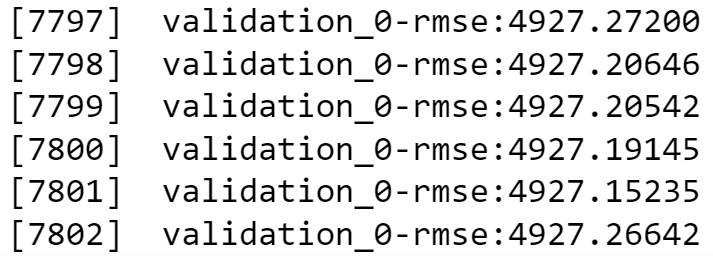

model.fit(X_train_sub, y_train_sub, eval_set = ([(X_test_sub, y_test_sub)]), early_stopping_rounds = 250)The results of the code are truncated to save space. A snapshot of the beginning and end of the results is below. The algorithm keeps adding trees to the model until the RMSE ceases to decrease for 250 consecutive iterations.

print("XGBoost RMSE = ",np.sqrt(mean_squared_error(model.predict(Xtest),ytest)))XGBoost RMSE = 5508.787454011525Let us further reduce the learning rate to 0.001 and see if the accuracy increases further on the test data. We’ll use the early_stopping_rounds argument to stop growing trees once the accuracy fails to increase for 250 consecutive iterations.

model = xgb.XGBRegressor(random_state = 1, max_depth = 8, learning_rate = 0.001,

n_estimators = 20000,reg_lambda = 1, gamma = 100, subsample = 0.75, colsample_bytree = 1.0)

model.fit(X_train_sub, y_train_sub, eval_set = ([(X_test_sub, y_test_sub)]), early_stopping_rounds = 250)

print("XGBoost RMSE = ",np.sqrt(mean_squared_error(model.predict(Xtest),ytest)))XGBoost RMSE = 5483.518711988693Note that the accuracy on this test data has further increased with a lower learning rate.

Let us combine the XGBoost model with other tuned models from earlier chapters.

#Tuned AdaBoost model from Section 7.2.4

model_ada = AdaBoostRegressor(base_estimator=DecisionTreeRegressor(max_depth=10),n_estimators=50,learning_rate=1.0,

random_state=1).fit(X,y)

print("AdaBoost RMSE = ", np.sqrt(mean_squared_error(model_ada.predict(Xtest),ytest)))

#Tuned Random forest model from Section 6.1.2

model_rf = RandomForestRegressor(n_estimators=300, random_state=1,

n_jobs=-1, max_features=2).fit(X, y)

print("Random Forest RMSE = ",np.sqrt(mean_squared_error(model_rf.predict(Xtest),ytest)))

#Tuned gradient boosting model from Section 8.2.5

model_gb = GradientBoostingRegressor(max_depth=8,n_estimators=100,learning_rate=0.1,

random_state=1,loss='huber').fit(X,y)

print("Gradient boost RMSE = ",np.sqrt(mean_squared_error(model_gb.predict(Xtest),ytest)))AdaBoost RMSE = 5693.165811600585

Random Forest RMSE = 5642.45839697972

Gradient boost RMSE = 5405.787029062213#Ensemble model

pred_xgb = model.predict(Xtest) #XGBoost

pred_ada = model_ada.predict(Xtest)#AdaBoost

pred_rf = model_rf.predict(Xtest) #Random Forest

pred_gb = model_gb.predict(Xtest) #Gradient boost

pred = 0.25*pred_xgb + 0.25*pred_ada + 0.25*pred_rf + 0.25*pred_gb #Option 1 - All models are equally weighted

#pred = 0.15*pred1+0.15*pred2+0.15*pred3+0.55*pred4 #Option 2 - Higher weight to the better model

print("Ensemble model RMSE = ", np.sqrt(mean_squared_error(pred,ytest)))Ensemble model RMSE = 5352.145010078119Combined, the random forest model, gradient boost, XGBoost and the Adaboost model do better than each of the individual models.

12.3 XGBoost for classification

data = pd.read_csv('./Datasets/Heart.csv')

data.dropna(inplace = True)

data.head()| Age | Sex | ChestPain | RestBP | Chol | Fbs | RestECG | MaxHR | ExAng | Oldpeak | Slope | Ca | Thal | AHD | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 63 | 1 | typical | 145 | 233 | 1 | 2 | 150 | 0 | 2.3 | 3 | 0.0 | fixed | No |

| 1 | 67 | 1 | asymptomatic | 160 | 286 | 0 | 2 | 108 | 1 | 1.5 | 2 | 3.0 | normal | Yes |

| 2 | 67 | 1 | asymptomatic | 120 | 229 | 0 | 2 | 129 | 1 | 2.6 | 2 | 2.0 | reversable | Yes |

| 3 | 37 | 1 | nonanginal | 130 | 250 | 0 | 0 | 187 | 0 | 3.5 | 3 | 0.0 | normal | No |

| 4 | 41 | 0 | nontypical | 130 | 204 | 0 | 2 | 172 | 0 | 1.4 | 1 | 0.0 | normal | No |

#Response variable

y = pd.get_dummies(data['AHD'])['Yes']

#Creating a dataframe for predictors with dummy varibles replacing the categorical variables

X = data.drop(columns = ['AHD','ChestPain','Thal'])

X = pd.concat([X,pd.get_dummies(data['ChestPain']),pd.get_dummies(data['Thal'])],axis=1)

X.head()| Age | Sex | RestBP | Chol | Fbs | RestECG | MaxHR | ExAng | Oldpeak | Slope | Ca | asymptomatic | nonanginal | nontypical | typical | fixed | normal | reversable | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 63 | 1 | 145 | 233 | 1 | 2 | 150 | 0 | 2.3 | 3 | 0.0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 |

| 1 | 67 | 1 | 160 | 286 | 0 | 2 | 108 | 1 | 1.5 | 2 | 3.0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

| 2 | 67 | 1 | 120 | 229 | 0 | 2 | 129 | 1 | 2.6 | 2 | 2.0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |

| 3 | 37 | 1 | 130 | 250 | 0 | 0 | 187 | 0 | 3.5 | 3 | 0.0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 4 | 41 | 0 | 130 | 204 | 0 | 2 | 172 | 0 | 1.4 | 1 | 0.0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 |

#Creating train and test datasets

Xtrain,Xtest,ytrain,ytest = train_test_split(X,y,train_size = 0.5,random_state=1)XGBoost has an additional parameter for classification: scale_pos_weight

Gradients are used as the basis for fitting subsequent trees added to boost or correct errors made by the existing state of the ensemble of decision trees.

The scale_pos_weight value is used to scale the gradient for the positive class.

This has the effect of scaling errors made by the model during training on the positive class and encourages the model to over-correct them. In turn, this can help the model achieve better performance when making predictions on the positive class. Pushed too far, it may result in the model overfitting the positive class at the cost of worse performance on the negative class or both classes.

As such, the scale_pos_weight hyperparameter can be used to train a class-weighted or cost-sensitive version of XGBoost for imbalanced classification.

A sensible default value to set for the scale_pos_weight hyperparameter is the inverse of the class distribution. For example, for a dataset with a 1 to 100 ratio for examples in the minority to majority classes, the scale_pos_weight can be set to 100. This will give classification errors made by the model on the minority class (positive class) 100 times more impact, and in turn, 100 times more correction than errors made on the majority class.

start_time = time.time()

param_grid = {'n_estimators':[25,100,500],

'max_depth': [6,7,8],

'learning_rate': [0.01,0.1,0.2],

'gamma': [0.1,0.25,0.5],

'reg_lambda':[0,0.01,0.001],

'scale_pos_weight':[1.25,1.5,1.75]#Control the balance of positive and negative weights, useful for unbalanced classes. A typical value to consider: sum(negative instances) / sum(positive instances).

}

cv = StratifiedKFold(n_splits=5,shuffle=True,random_state=1)

optimal_params = GridSearchCV(estimator=xgb.XGBClassifier(objective = 'binary:logistic',random_state=1,

use_label_encoder=False),

param_grid = param_grid,

scoring = 'accuracy',

verbose = 1,

n_jobs=-1,

cv = cv)

optimal_params.fit(Xtrain,ytrain)

print(optimal_params.best_params_,optimal_params.best_score_)

print("Time taken = ", (time.time()-start_time)/60, " minutes")Fitting 5 folds for each of 729 candidates, totalling 3645 fits

[22:00:02] WARNING: D:\bld\xgboost-split_1645118015404\work\src\learner.cc:1115: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'binary:logistic' was changed from 'error' to 'logloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

{'gamma': 0.25, 'learning_rate': 0.2, 'max_depth': 6, 'n_estimators': 25, 'reg_lambda': 0.01, 'scale_pos_weight': 1.5} 0.872183908045977cv_results=pd.DataFrame(optimal_params.cv_results_)

cv_results.sort_values(by = 'mean_test_score',ascending=False)[0:5]| mean_fit_time | std_fit_time | mean_score_time | std_score_time | param_gamma | param_learning_rate | param_max_depth | param_n_estimators | param_reg_lambda | param_scale_pos_weight | params | split0_test_score | split1_test_score | split2_test_score | split3_test_score | split4_test_score | mean_test_score | std_test_score | rank_test_score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 409 | 0.111135 | 0.017064 | 0.005629 | 0.000737 | 0.25 | 0.2 | 6 | 25 | 0.01 | 1.5 | {'gamma': 0.25, 'learning_rate': 0.2, 'max_dep... | 0.866667 | 0.766667 | 0.9 | 0.931034 | 0.896552 | 0.872184 | 0.05656 | 1 |

| 226 | 0.215781 | 0.007873 | 0.005534 | 0.001615 | 0.1 | 0.2 | 8 | 100 | 0 | 1.5 | {'gamma': 0.1, 'learning_rate': 0.2, 'max_dept... | 0.833333 | 0.766667 | 0.9 | 0.931034 | 0.896552 | 0.865517 | 0.05874 | 2 |

| 290 | 1.391273 | 0.107808 | 0.007723 | 0.006286 | 0.25 | 0.01 | 7 | 500 | 0 | 1.75 | {'gamma': 0.25, 'learning_rate': 0.01, 'max_de... | 0.833333 | 0.766667 | 0.9 | 0.931034 | 0.896552 | 0.865517 | 0.05874 | 2 |

| 266 | 1.247463 | 0.053597 | 0.006830 | 0.002728 | 0.25 | 0.01 | 6 | 500 | 0.01 | 1.75 | {'gamma': 0.25, 'learning_rate': 0.01, 'max_de... | 0.833333 | 0.766667 | 0.9 | 0.931034 | 0.896552 | 0.865517 | 0.05874 | 2 |

| 269 | 1.394361 | 0.087307 | 0.005530 | 0.001718 | 0.25 | 0.01 | 6 | 500 | 0.001 | 1.75 | {'gamma': 0.25, 'learning_rate': 0.01, 'max_de... | 0.833333 | 0.766667 | 0.9 | 0.931034 | 0.896552 | 0.865517 | 0.05874 | 2 |

#Function to compute confusion matrix and prediction accuracy on test/train data

def confusion_matrix_data(data,actual_values,model,cutoff=0.5):

#Predict the values using the Logit model

pred_values = model.predict_proba(data)[:,1]

# Specify the bins

bins=np.array([0,cutoff,1])

#Confusion matrix

cm = np.histogram2d(actual_values, pred_values, bins=bins)[0]

cm_df = pd.DataFrame(cm)

cm_df.columns = ['Predicted 0','Predicted 1']

cm_df = cm_df.rename(index={0: 'Actual 0',1:'Actual 1'})

# Calculate the accuracy

accuracy = 100*(cm[0,0]+cm[1,1])/cm.sum()

fnr = 100*(cm[1,0])/(cm[1,0]+cm[1,1])

precision = 100*(cm[1,1])/(cm[0,1]+cm[1,1])

fpr = 100*(cm[0,1])/(cm[0,0]+cm[0,1])

tpr = 100*(cm[1,1])/(cm[1,0]+cm[1,1])

print("Accuracy = ", accuracy)

print("Precision = ", precision)

print("FNR = ", fnr)

print("FPR = ", fpr)

print("TPR or Recall = ", tpr)

print("Confusion matrix = \n", cm_df)

return (" ")model4 = xgb.XGBClassifier(objective = 'binary:logistic',random_state=1,gamma=0.25,learning_rate = 0.01,max_depth=6,

n_estimators = 500,reg_lambda = 0.01,scale_pos_weight=1.75)

model4.fit(Xtrain,ytrain)

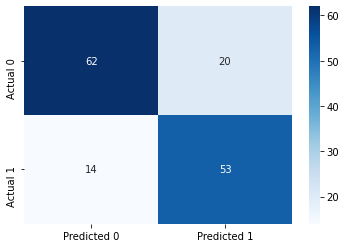

model4.score(Xtest,ytest)0.7718120805369127#Computing the accuracy

y_pred = model4.predict(Xtest)

print("Accuracy: ",accuracy_score(y_pred, ytest)*100)

#Computing the ROC-AUC

y_pred_prob = model4.predict_proba(Xtest)[:,1]

fpr, tpr, auc_thresholds = roc_curve(ytest, y_pred_prob)

print("ROC-AUC: ",auc(fpr, tpr))# AUC of ROC

#Computing the precision and recall

print("Precision: ", precision_score(ytest, y_pred))

print("Recall: ", recall_score(ytest, y_pred))

#Confusion matrix

cm = pd.DataFrame(confusion_matrix(ytest, y_pred), columns=['Predicted 0', 'Predicted 1'],

index = ['Actual 0', 'Actual 1'])

sns.heatmap(cm, annot=True, cmap='Blues', fmt='g');Accuracy: 77.18120805369128

ROC-AUC: 0.8815070986530761

Precision: 0.726027397260274

Recall: 0.7910447761194029

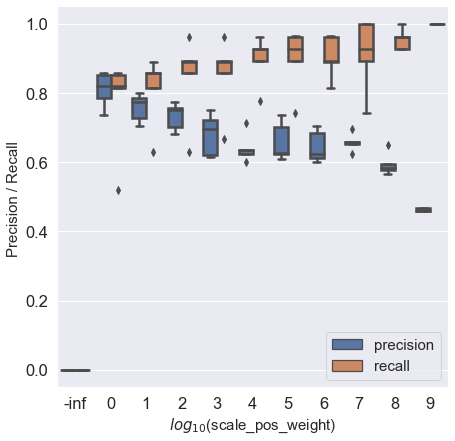

If we increase the value of scale_pos_weight, the model will focus on classifying positives more correctly. This will increase the recall (true positive rate) since the focus is on identifying all positives. However, this will lead to identifying positives aggressively, and observations ‘similar’ to observations of the positive class will also be predicted as positive resulting in an increase in false positives and a decrease in precision. See the trend below as we increase the value of scale_pos_weight.

12.3.1 Precision & recall vs scale_pos_weight

def get_models():

models = dict()

# explore 'scale_pos_weight' from 0.1 to 2 in 0.1 increments

for i in [0,1,10,1e2,1e3,1e4,1e5,1e6,1e7,1e8,1e9]:

key = '%.0f' % i

models[key] = xgb.XGBClassifier(objective = 'binary:logistic',scale_pos_weight=i,random_state=1)

return models

# evaluate a given model using cross-validation

def evaluate_model(model, X, y):

# define the evaluation procedure

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)

# evaluate the model and collect the results

scores_recall = cross_val_score(model, X, y, scoring='recall', cv=cv, n_jobs=-1)

scores_precision = cross_val_score(model, X, y, scoring='precision', cv=cv, n_jobs=-1)

return list([scores_recall,scores_precision])

# get the models to evaluate

models = get_models()

# evaluate the models and store results

results_recall, results_precision, names = list(), list(), list()

for name, model in models.items():

# evaluate the model

scores = evaluate_model(model, X, y)

scores_recall = scores[0]

scores_precision = scores[1]

# store the results

results_recall.append(scores_recall)

results_precision.append(scores_precision)

names.append(name)

# summarize the performance along the way

print('>%s %.2f (%.2f)' % (name, np.mean(scores_recall), np.std(scores_recall)))

# plot model performance for comparison

plt.figure(figsize=(7, 7))

sns.set(font_scale = 1.5)

pdata = pd.DataFrame(results_precision)

pdata.columns = list(['p1','p2','p3','p4','p5'])

pdata['metric'] = 'precision'

rdata = pd.DataFrame(results_recall)

rdata.columns = list(['p1','p2','p3','p4','p5'])

rdata['metric'] = 'recall'

pr_data = pd.concat([pdata,rdata])

pr_data.reset_index(drop=False,inplace= True)

#sns.boxplot(x="day", y="total_bill", hue="time",pr_data=tips, linewidth=2.5)

pr_data_melt=pr_data.melt(id_vars = ['index','metric'])

pr_data_melt['index']=pr_data_melt['index']-1

pr_data_melt['index'] = pr_data_melt['index'].astype('str')

pr_data_melt.replace(to_replace='-1',value = '-inf',inplace=True)

sns.boxplot(x='index', y="value", hue="metric", data=pr_data_melt, linewidth=2.5)

plt.xlabel('$log_{10}$(scale_pos_weight)',fontsize=15)

plt.ylabel('Precision / Recall ',fontsize=15)

plt.legend(loc="lower right", frameon=True, fontsize=15)>0 0.00 (0.00)

>1 0.77 (0.13)

>10 0.81 (0.09)

>100 0.85 (0.11)

>1000 0.85 (0.10)

>10000 0.90 (0.06)

>100000 0.90 (0.08)

>1000000 0.90 (0.06)

>10000000 0.91 (0.10)

>100000000 0.96 (0.03)

>1000000000 1.00 (0.00)